How to do continuous integration and continuous delivery of APIs with WSO2 API Manager

WSO2 API Manager, the only Open Source Leader in API Management Solutions in the Forrester Wave, packs in a wide range of advanced API Management features that cover a large number of end-user stories. Through customizations at the extension points available throughout the product, WSO2 API Manager can be adapted to almost any API Management scenario imaginable.

An interesting scenario in API Management is how to perform Continuous Integration and Continuous Delivery of APIs. CI/CD, usually mentioned in the context of managing application code, is a more general concept when it comes to integrating API Management into a DevOps culture.

Continuous Integration of APIs is about seamlessly automating the API creation and update processes with minimal intervention from Ops personnel. Continuous Delivery of APIs is about promoting these APIs from lower environments to pre-production (or, if you are adventurous and well automated, production) systems with minimal intervention from Ops personnel.

Continuous Integration

Automating API creation is an open-ended scenario. Each story may have different parameters and limitations, introduced by business requirements, that have to be tackled per story.

However, each implementation will be broadly similar in how the different layers of the automation interact. There will be some kind of input channel for API creation requests, which in turn triggers a job on an automation tool. The job analyzes the submitted API creation requests and makes the necessary API Management REST API calls to WSO2 API Manager.

For the sake of simplicity, and to restrict a potentially open-ended discussion to a narrow set of requirements, let’s assume the following story.

The API requesting party will submit a structured request (which is both human and machine readable) to create a new API to a VCS (e.g. a Git repository). This will trigger a build job on Jenkins (i.e. the automation tool) that will analyze the submitted structured request and make HTTP calls to WSO2 API Manager Publisher REST APIs, to create new APIs.

During API creation, the number of inputs expected from the requesting party can vary based on the story. For example, some stories may only require a few metadata fields such as name, context, and description, whereas others may demand more details such as backend URLs, tagging metadata, and resource configuration. It’s the responsibility of whoever designs this process to decide the level of detail required at process initiation and codify it into a proper structured request format.

For example, a minimal API creation request could look like the following JSON document.

```json
{
  "username": "Username of the user",
  "department": "Department of the user",
  "apis": [
    {
      "name": "SampleAPI_dev1",
      "description": "Sample API description",
      "context": "/api/dev1/sample",
      "version": "v1"
    }
  ]
}
```

The JSON Schema for this request format is as follows.

```json
{
  "$schema": "http://json-schema.org/draft-04/schema#",
  "type": "object",
  "properties": {
    "username": {
      "type": "string"
    },
    "department": {
      "type": "string"
    },
    "apis": {
      "type": "array",
      "items": {
        "type": "object",
        "properties": {
          "name": {
            "type": "string"
          },
          "description": {
            "type": "string"
          },
          "context": {
            "type": "string"
          },
          "version": {
            "type": "string"
          }
        },
        "required": [
          "name",
          "description",
          "context",
          "version"
        ]
      }
    }
  },
  "required": [
    "username",
    "department",
    "apis"
  ]
}
```
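Because the request format only needs a handful of string fields, a job could also pre-check incoming requests without a JSON Schema library. The following is a minimal, stdlib-only sketch (the validate_request helper and the sample values are hypothetical, not part of the PoC) that reports missing or non-string fields at the top level and in every entry of apis. It is not a general JSON Schema validator.

```python
import json

# Hypothetical helper: mirrors only the "required" and "type": "string"
# constraints of the request format; it is NOT a general JSON Schema validator.
TOP_LEVEL_FIELDS = ("username", "department")
API_FIELDS = ("name", "description", "context", "version")


def validate_request(document):
    """Return a list of human-readable problems; an empty list means valid."""
    if not isinstance(document, dict):
        return ["request must be a JSON object"]
    problems = []
    for field in TOP_LEVEL_FIELDS:
        if not isinstance(document.get(field), str):
            problems.append("missing or non-string field: %s" % field)
    apis = document.get("apis")
    if not isinstance(apis, list):
        problems.append("missing or non-array field: apis")
        return problems
    # Check every entry in "apis", not only the first one.
    for index, api in enumerate(apis):
        if not isinstance(api, dict):
            problems.append("apis[%d] must be an object" % index)
            continue
        for field in API_FIELDS:
            if not isinstance(api.get(field), str):
                problems.append("apis[%d]: missing or non-string field: %s" % (index, field))
    return problems


example = json.loads("""
{"username": "jdoe", "department": "dev",
 "apis": [{"name": "SampleAPI_dev1", "description": "Sample API description",
           "context": "/api/dev1/sample", "version": "v1"},
          {"name": "Incomplete_dev1"}]}
""")
print(validate_request(example))
```

If adding a dependency is acceptable, the jsonschema package's validate(instance, schema) function can check requests against the schema itself instead.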

Since this example only takes the minimal parameters for API creation, the APIs will be created in the CREATED state, which allows the creation request to carry only minimal data.

It’s the job of the automation tool to make sense of this request format and perform the necessary actions. I have implemented a sample Python script that does this (and also performs the Continuous Delivery described in the next section) and uploaded the code to GitHub. This script can be invoked by the automation tool (e.g. as a shell build step in Jenkins) in a job that is triggered by a post-commit hook from the Git repository containing the API requests.

Let’s briefly go over the Python code to understand the basic implementation of a CI flow.

The first task is to parse the JSON requests so that each API request can be made.

```python
import glob
import json

for api_request_file in glob.glob("api_definitions/*_apis.json"):
    # Close each request file as soon as it has been parsed
    with open(api_request_file) as request_file:
        request_json_content = json.load(request_file)

    requesting_user = request_json_content["username"]
    print("Parsing create requests for [user] %s [department] %s [requests] %s..." % (
        requesting_user, request_json_content["department"], len(request_json_content["apis"])))
```

The JSON requests are stored as files inside a particular folder, api_definitions. The reason JSON was selected as the DSL for this PoC is clear here: Python's built-in JSON support makes parsing the requests trivial.
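To make the file-loading step testable on its own, it can be pulled out into a generator. This is a minimal sketch with a hypothetical load_api_requests helper (not the PoC's code); it yields one (username, department, api_request) tuple per API across all *_apis.json files, in a deterministic order.

```python
import glob
import json
import os
import tempfile


def load_api_requests(directory="api_definitions"):
    """Yield (username, department, api_request) for every API in every *_apis.json file."""
    pattern = os.path.join(directory, "*_apis.json")
    # sorted() keeps the processing order stable between runs
    for path in sorted(glob.glob(pattern)):
        with open(path) as request_file:
            content = json.load(request_file)
        for api_request in content["apis"]:
            yield content["username"], content["department"], api_request


# Usage with a throwaway directory; "notes.txt" does not match the pattern and is ignored.
with tempfile.TemporaryDirectory() as tmp:
    with open(os.path.join(tmp, "team_a_apis.json"), "w") as f:
        json.dump({"username": "alice", "department": "dev",
                   "apis": [{"name": "SampleAPI_dev1", "description": "d",
                             "context": "/api/dev1/sample", "version": "v1"}]}, f)
    with open(os.path.join(tmp, "notes.txt"), "w") as f:
        f.write("not an API request")
    loaded = list(load_api_requests(tmp))

print(len(loaded), loaded[0][2]["name"])
```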

The parsed data then has to be converted into API create requests. Before that can happen, however, the existence of each API in the environment has to be checked. If a CREATE request is made for an API that already exists (matching name, version, and context), the REST API returns an error. In this case, no CREATE request should be made.

There is another edge case, where the API already exists by name and context but not by version. In this case, the request should create a new version of the existing API.
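The three cases above can be separated from the HTTP calls into a pure decision function, which makes them easy to unit test without a running API Manager. A minimal sketch, assuming the existing APIs are available as a set of (name, version) pairs; like the code below, it matches an existing API by name only. The plan_action name and the action labels are hypothetical.

```python
# Hypothetical sketch: decide what to do with one API request.
# `existing` is assumed to be a set of (name, version) pairs already in the Publisher.

def plan_action(api_request, existing):
    name, version = api_request["name"], api_request["version"]
    if (name, version) in existing:
        return "skip"         # same name and version: a CREATE would fail
    if any(existing_name == name for existing_name, _ in existing):
        return "add_version"  # the API exists, but not this version
    return "create"           # no API with this name yet


existing = {("SampleAPI_dev1", "v1")}
for request in ({"name": "SampleAPI_dev1", "version": "v1"},
                {"name": "SampleAPI_dev1", "version": "v2"},
                {"name": "OtherAPI_dev1", "version": "v1"}):
    print(request["name"], request["version"], plan_action(request, existing))
```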

```python
# If there is no matching API, an API create request is made.
# If a matching API exists but not the matching version, a new version is added.
# If a matching API with a matching version exists, the API is not created.
for api_request in request_json_content["apis"]:
    api_name_exists, api_id = api_utils.api_name_exists(api_request["name"], apimgt_url, access_token,
                                                        verify_ssl)
    if api_name_exists:
        # Check if the same version exists
        api_version_exists, _ = api_utils.api_version_exists(api_request["name"], api_request["version"],
                                                             apimgt_url, access_token, verify_ssl)
        if api_version_exists:
            # Do not create the same version
            print("\t%s:%s \t :Exists. Skipping..." % (api_request["name"], api_request["version"]))
            continue
        else:
            # Add as a new version
            print("\t%s:%s \t :Adding version..." % (api_request["name"], api_request["version"]))
            api_utils.add_api_version(api_id, api_request["version"], apimgt_url, access_token, verify_ssl)
    else:
        # Create API
        print("\t%s:%s \t :Creating..." % (api_request["name"], api_request["version"]))
        # build create request
        # ....
        # ....
        # ....
        successful = api_utils.create_api(create_req_body, apimgt_url, access_token, verify_ssl)
```

The complete script can be found in the GitHub repository. It contains additional logic that loads configuration parameters, obtains access tokens, and handles errors.
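The repository's api_utils helpers are not reproduced here. As a hypothetical sketch of what a create call involves, the following builds (without sending) an authenticated POST request using urllib from the standard library. The /api/am/publisher/v0.14/apis path is an assumption based on the Publisher REST API version shipped with WSO2 API Manager 2.6.0, and access_token is assumed to be an OAuth2 token carrying the apim:api_create scope; check the Publisher REST API reference for your release, including the body fields it expects.

```python
import json
import urllib.request

# Assumption: API Manager 2.6.0 serves the Publisher REST API under v0.14.
PUBLISHER_API_PATH = "/api/am/publisher/v0.14/apis"


def build_create_api_request(apimgt_url, access_token, create_req_body):
    """Build (but do not send) a POST request that asks the Publisher to create an API."""
    return urllib.request.Request(
        apimgt_url.rstrip("/") + PUBLISHER_API_PATH,
        data=json.dumps(create_req_body).encode("utf-8"),
        headers={
            "Authorization": "Bearer " + access_token,
            "Content-Type": "application/json",
        },
        method="POST",
    )


request = build_create_api_request("https://localhost:9443/", "example-token",
                                   {"name": "SampleAPI_dev1", "version": "v1"})
print(request.get_method(), request.full_url)
```

Sending it is a matter of passing the request to urllib.request.urlopen; against the self-signed certificates of a local test deployment, a verify_ssl value of False would correspond to an ssl context with certificate verification turned off.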

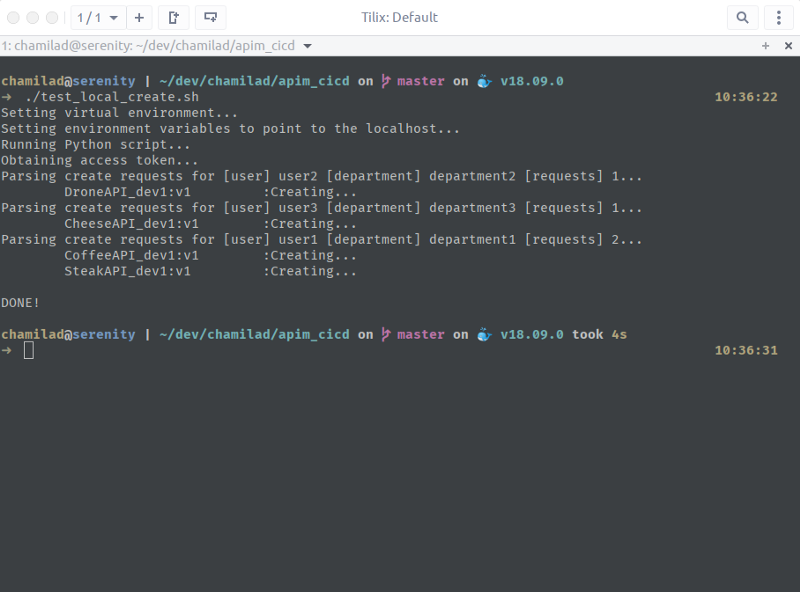

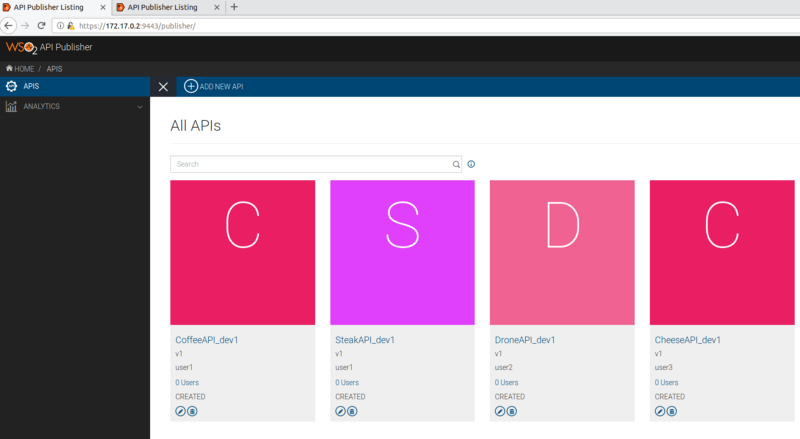

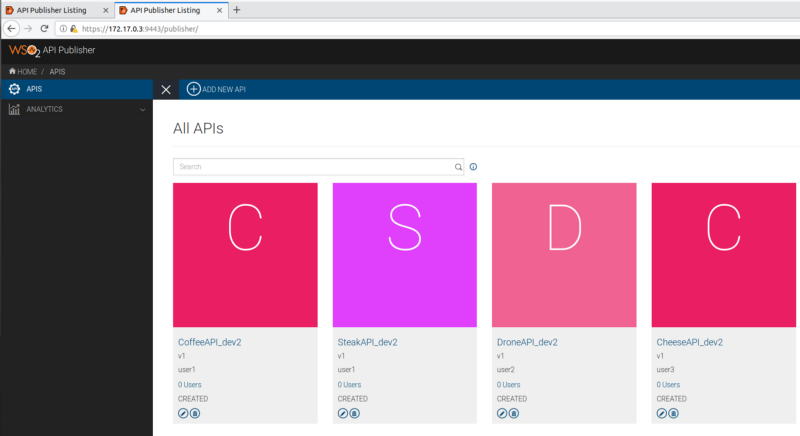

This script turns the requests contained in these JSON files into the following APIs, in the CREATED state, in the WSO2 API Manager Publisher.

It’s then the responsibility of the implementing party to add the necessary implementation details and publish the APIs.

In a different story, where all the required details are obtained in the API creation request, the script itself can create the APIs in the PUBLISHED state. This eliminates the need for end users to get involved after the APIs are created, but it places full trust in the correctness of the API requests written by the end users.
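In such a story, the script has to decide per request which state to target. A minimal sketch, assuming hypothetical production_endpoint and sandbox_endpoint fields that the request format above does not define: it targets PUBLISHED only when every publish-time detail is present and falls back to CREATED otherwise.

```python
# Hypothetical field names: the minimal request format above does not include them.
FIELDS_REQUIRED_FOR_PUBLISH = ("production_endpoint", "sandbox_endpoint")


def initial_status(api_request, required_fields=FIELDS_REQUIRED_FOR_PUBLISH):
    """Return "PUBLISHED" only when every publish-time detail is present and non-empty."""
    if all(api_request.get(field) for field in required_fields):
        return "PUBLISHED"
    # Otherwise leave the API in CREATED so a person can complete it before publishing.
    return "CREATED"


minimal = {"name": "SampleAPI_dev1", "context": "/api/dev1/sample", "version": "v1"}
complete = dict(minimal, production_endpoint="http://prod.example.com",
                sandbox_endpoint="http://sandbox.example.com")
print(initial_status(minimal), initial_status(complete))
```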

Continuous Delivery

Continuous Delivery of APIs into production, promoting them from lower-level environments with proper testing along the way, is a goal worth designing for. Once the APIs are created in the lowest environment, a periodic or manually triggered automation job can read the API details from one environment, create or update them in the next environment, and ideally run API tests on the higher-level environment to make sure no contract is broken by the changes introduced.

In the PoC scripts in my GitHub repository, the API read and propagation step is implemented as another Python script. Given the details of the two environments, it iterates through each API read from the lower-level environment, checks whether it exists in the upper-level environment, and makes a CREATE or UPDATE request as needed.

```python
# 1. Get list of APIs from env1
print("Getting the list of APIs from ENV1...")
all_apis_in_env1 = api_utils.get_all_apis(env1_apimgt_url, env1_access_token, verify_ssl)
#...
#...
#...

# 2. Iterate through APIs and check if exists in env2
print("Checking for API status in ENV2...")
for api_to_propagate in all_apis_in_env1["list"]:
    #...
    # get the api definition from env 1
    api_definition_env1 = api_utils.get_api_by_id(api_to_propagate["id"], env1_apimgt_url, env1_access_token,
                                                  verify_ssl)
    #...
    #...
    # check if API exists in env2
    api_exists_in_env2, api_id = api_utils.api_version_exists(api_definition_env1["name"],
                                                              api_definition_env1["version"],
                                                              env2_apimgt_url, env2_access_token, verify_ssl)
    if api_exists_in_env2:
        # 3. If exists, update API (using the id it has in env2)
        api_definition_env1["id"] = api_id
        print("Updating API %s in ENV2..." % api_definition_env1["name"])
        api_utils.update_api(api_definition_env1, env2_apimgt_url, env2_access_token, verify_ssl)
    else:
        # 4. If doesn't exist, create API in env2
        print("Creating API %s in ENV2..." % api_definition_env1["name"])
        api_utils.create_api(api_definition_env1, env2_apimgt_url, env2_access_token, verify_ssl)
```

The logic here is straightforward.

- The list of APIs is retrieved from ENV1.

- For each API retrieved, the API definition is also retrieved from ENV1.

- For each API, its existence in ENV2 is checked, and based on the result, a CREATE or UPDATE request is made to ENV2.
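The steps above can also be expressed as a plan computed from the two API listings before any request is sent, which is handy for a dry run in the pipeline. A minimal sketch with a hypothetical plan_propagation helper; each listing is assumed to be a list of dicts with "id", "name" and "version" keys, and APIs are matched by (name, version) as in the propagation code.

```python
# Hypothetical sketch: compute CREATE/UPDATE actions for ENV2 without calling any API.

def plan_propagation(env1_apis, env2_apis):
    env2_ids = {(api["name"], api["version"]): api["id"] for api in env2_apis}
    plan = []
    for api in env1_apis:
        key = (api["name"], api["version"])
        if key in env2_ids:
            plan.append(("UPDATE", key, env2_ids[key]))  # update using ENV2's own id
        else:
            plan.append(("CREATE", key, None))
    return plan


env1_apis = [{"id": "a1", "name": "SampleAPI", "version": "v1"},
             {"id": "a2", "name": "OtherAPI", "version": "v1"}]
env2_apis = [{"id": "b7", "name": "SampleAPI", "version": "v1"}]
for action in plan_propagation(env1_apis, env2_apis):
    print(action)
```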

For the sake of simplicity, the case where a different version of the same API already exists in ENV2 is not handled separately here.

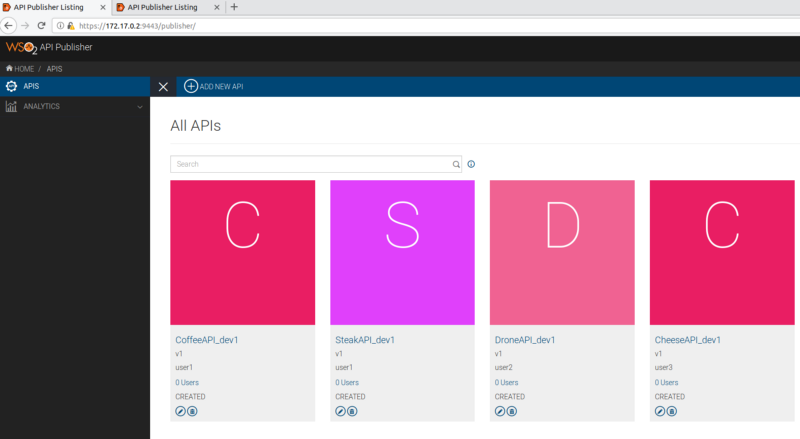

To demonstrate that API information can be changed during this migration, the API name, context, and backend URL have been modified to contain environment-specific details.
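One way to make such environment-specific changes is to rewrite the definition between the read from ENV1 and the write to ENV2. A minimal sketch with a hypothetical adapt_for_env helper, assuming the environment identifier (e.g. the values of WSO2_APIM_ENV1_ID and WSO2_APIM_ENV2_ID) appears literally in the name, context and endpoint configuration, as "dev1" does in SampleAPI_dev1 and /api/dev1/sample. The endpointConfig field name and its string type are assumptions about the API definition's shape.

```python
import copy


def adapt_for_env(api_definition, source_env_id, target_env_id):
    """Return a copy of the definition with environment-specific values rewritten."""
    adapted = copy.deepcopy(api_definition)  # never mutate the ENV1 definition
    for field in ("name", "context", "endpointConfig"):
        value = adapted.get(field)
        if isinstance(value, str):
            adapted[field] = value.replace(source_env_id, target_env_id)
    # The ENV1 id means nothing in ENV2; an update sets ENV2's own id afterwards.
    adapted.pop("id", None)
    return adapted


env1_definition = {
    "id": "env1-api-id",
    "name": "SampleAPI_dev1",
    "context": "/api/dev1/sample",
    "version": "v1",
    "endpointConfig": '{"production_endpoints": {"url": "http://backend.dev1.example.com"}}',
}
env2_definition = adapt_for_env(env1_definition, "dev1", "qa1")
print(env2_definition["name"], env2_definition["context"])
```

Plain substring replacement is crude (an identifier such as dev1 also matches inside dev10), so a real pipeline would likely want a stricter substitution rule.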

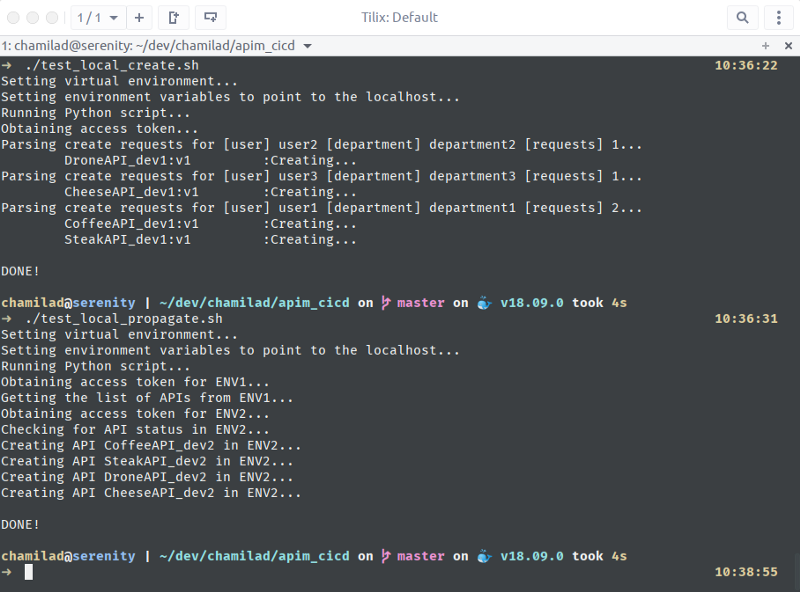

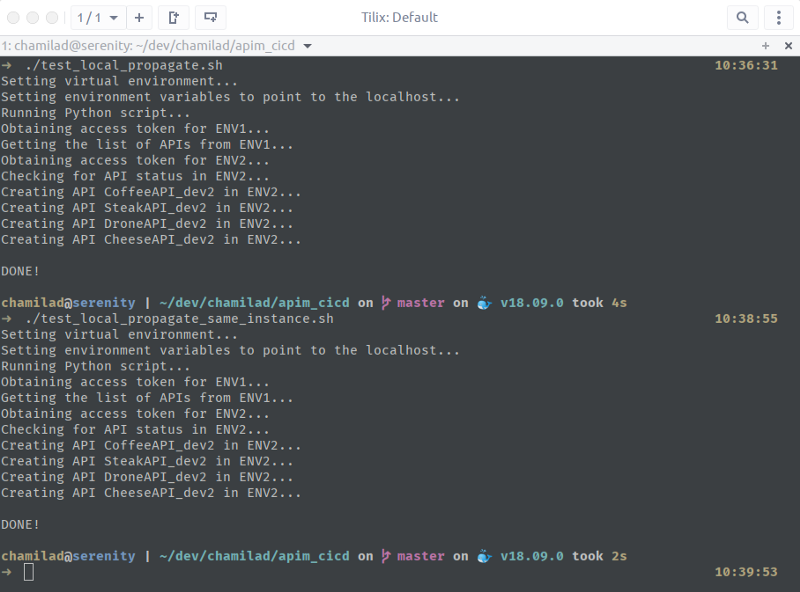

Running this script results in output similar to the following in the two environments.

This script could be part of an automation pipeline (e.g. a Jenkins Pipeline) where the next step is the execution of a set of API tests. The API tests make sure the API contracts are not broken, which builds confidence in delivery. This is crucial for a Continuous Delivery process. Otherwise, the tendency will be towards manual intervention that halts the seamless integration of changes through the environments towards production, because there is not enough trust in the process not to break the APIs. These API tests can be as simple as a JMeter or SoapUI test suite that makes API-specific calls and verifies the responses received. Without such a testing phase, the APIs should never be pushed to production automatically. Doing so may expose consumers to frequent API changes, which will reduce their confidence in your APIs.
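A cheap static check can run before those functional tests. The following is a hypothetical sketch, not part of the PoC and not a replacement for a JMeter or SoapUI suite: it flags operations that exist in the currently deployed definition but are missing from the one about to be pushed, which would break existing consumers. It assumes both definitions are Swagger 2.0 documents parsed into dicts.

```python
# Swagger 2.0 path items may hold these operation keys (plus e.g. "parameters").
HTTP_METHODS = {"get", "put", "post", "delete", "options", "head", "patch"}


def operations(swagger):
    """Return the set of (METHOD, path) pairs declared in a Swagger 2.0 document."""
    return {
        (method.upper(), path)
        for path, path_item in swagger.get("paths", {}).items()
        for method in path_item
        if method.lower() in HTTP_METHODS
    }


def removed_operations(deployed, candidate):
    """Operations consumers rely on today that the candidate definition drops."""
    return sorted(operations(deployed) - operations(candidate))


deployed = {"paths": {"/items": {"get": {}, "post": {}, "parameters": []},
                      "/items/{id}": {"get": {}}}}
candidate = {"paths": {"/items": {"get": {}},
                       "/items/{id}": {"get": {}, "delete": {}}}}
print(removed_operations(deployed, candidate))
```

Added operations (such as the new DELETE above) are not reported, since they do not break existing consumers; a pipeline could stop promotion whenever the returned list is non-empty.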

What about Import/Export?

The traditional approach to migrating APIs between deployments is the WSO2 API Manager Import/Export Tool. However, this tool does not handle the create/update decision for each individual API, as it assumes the APIs do not already exist in the target environment. Import/Export handles APIs in bulk, whereas Continuous Delivery might have to work at the granularity of each API. Therefore, the standard WSO2 API Manager Publisher REST APIs are used in the CI/CD implementation above.

Use of the PoC Scripts

The PoC scripts in the GitHub repository may not be directly usable in all CI/CD stories, because of the assumptions made during implementation. However, by modifying the Python scripts, they can be adapted to suit the majority of scenarios.

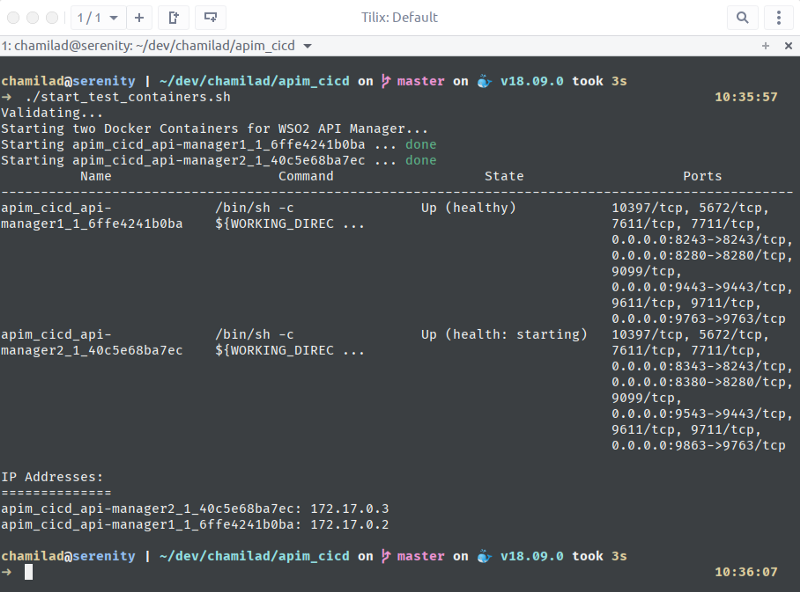

To make it easy to try out the scripts, a Docker Compose setup has been provided. This docker-compose.yml setup contains two WSO2 API Manager 2.6.0 containers, mapped to ports 9443/8243 (api-manager1) and 9543/8343 (api-manager2) respectively.

To start this deployment, run the start_test_containers.sh script. In essence, this runs docker-compose up and prints the IP addresses of the containers.

The containers are attached to the default Docker bridge network on the host, and therefore receive IP addresses from the 172.17.0.0/16 range.

Once the testing is done, the deployment can be torn down with the script stop_test_containers.sh.

The Python scripts take the required parameters as environment variables. Therefore, any automation tool that executes these scripts should inject the environment variables with the proper values during execution. For example, Jenkins can use the EnvInject plugin to load environment variables from a pre-populated properties file for a build job.

The environment variables required for each script are as follows.

For api_create.py:

- API Manager URL: WSO2_APIM_APIMGT_URL
- Gateway URL: WSO2_APIM_GW_URL
- API Manager Username: WSO2_APIM_APIMGT_USERNAME
- API Manager Password: WSO2_APIM_APIMGT_PASSWD
- Verify SSL flag: WSO2_APIM_VERIFY_SSL
- Production Backend URL: WSO2_APIM_BE_PROD
- Sandbox Backend URL: WSO2_APIM_BE_SNDBX
- API Status: WSO2_APIM_API_STATUS

For api_propagate.py:

- ENV1 API Manager URL: WSO2_APIM_ENV1_APIMGT_URL
- ENV1 Gateway URL: WSO2_APIM_ENV1_GW_URL
- ENV1 API Manager Username: WSO2_APIM_ENV1_APIMGT_USERNAME
- ENV1 API Manager Password: WSO2_APIM_ENV1_APIMGT_PASSWD
- ENV1 Identifier: WSO2_APIM_ENV1_ID
- ENV2 API Manager URL: WSO2_APIM_ENV2_APIMGT_URL
- ENV2 Gateway URL: WSO2_APIM_ENV2_GW_URL
- ENV2 API Manager Username: WSO2_APIM_ENV2_APIMGT_USERNAME
- ENV2 API Manager Password: WSO2_APIM_ENV2_APIMGT_PASSWD
- ENV2 Identifier: WSO2_APIM_ENV2_ID
- Verify SSL flag: WSO2_APIM_VERIFY_SSL
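Reading these variables in one place, and failing fast when one is missing, makes misconfigured build jobs easier to diagnose. A minimal sketch with a hypothetical load_settings helper: the variable names come from the lists above, while treating every listed variable as required and keeping SSL verification on unless the flag is explicitly falsy are assumptions that may be stricter than the real scripts.

```python
import os

CREATE_VARS = (
    "WSO2_APIM_APIMGT_URL", "WSO2_APIM_GW_URL", "WSO2_APIM_APIMGT_USERNAME",
    "WSO2_APIM_APIMGT_PASSWD", "WSO2_APIM_BE_PROD", "WSO2_APIM_BE_SNDBX",
    "WSO2_APIM_API_STATUS",
)
PROPAGATE_VARS = (
    "WSO2_APIM_ENV1_APIMGT_URL", "WSO2_APIM_ENV1_GW_URL",
    "WSO2_APIM_ENV1_APIMGT_USERNAME", "WSO2_APIM_ENV1_APIMGT_PASSWD", "WSO2_APIM_ENV1_ID",
    "WSO2_APIM_ENV2_APIMGT_URL", "WSO2_APIM_ENV2_GW_URL",
    "WSO2_APIM_ENV2_APIMGT_USERNAME", "WSO2_APIM_ENV2_APIMGT_PASSWD", "WSO2_APIM_ENV2_ID",
)


def load_settings(names, environ=None):
    """Read the named variables, exiting with a clear message if any are missing or empty."""
    environ = os.environ if environ is None else environ
    missing = [name for name in names if not environ.get(name)]
    if missing:
        raise SystemExit("Missing environment variables: " + ", ".join(missing))
    settings = {name: environ[name] for name in names}
    # Assumption: verification stays on unless the flag is explicitly falsy.
    flag = environ.get("WSO2_APIM_VERIFY_SSL", "true").strip().lower()
    settings["WSO2_APIM_VERIFY_SSL"] = flag not in ("0", "false", "no")
    return settings


sample = {name: "placeholder" for name in PROPAGATE_VARS}
sample["WSO2_APIM_VERIFY_SSL"] = "False"
settings = load_settings(PROPAGATE_VARS, sample)
print(settings["WSO2_APIM_VERIFY_SSL"])
```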

The test_local_*.sh scripts can be used to run the Python scripts with the required environment variables set.

1. test_local_create.sh: This script runs the api_create.py script, pointing it to the local setup at localhost:9443 (172.17.0.1 is the host's address on the default Docker bridge network, so it effectively refers to localhost).
2. test_local_propagate.sh: This script runs the api_propagate.py script, pointed to two API Manager deployments on localhost, the second one with a port offset of 100, i.e. 172.17.0.1:9443 and 172.17.0.1:9543.
3. test_local_propagate_same_instance.sh: This does the same as #2 above, but with both environments pointing to the same API Manager deployment.
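The port arithmetic behind these scripts can be captured in a few lines. The following hypothetical sketch builds the kind of environment test_local_propagate.sh and test_local_propagate_same_instance.sh set up: both environments on 172.17.0.1, with the second offset by 100 unless it points at the same instance. The https://host:port URL shape, the admin/admin credentials (WSO2's defaults) and the dev/qa identifiers are assumptions, not values taken from the repository's scripts.

```python
def local_propagate_env(host="172.17.0.1", env2_offset=100, same_instance=False):
    """Build hypothetical ENV1/ENV2 variables for the local Docker Compose setup."""
    offset = 0 if same_instance else env2_offset
    env = {}
    for prefix, port_offset, env_id in (("ENV1", 0, "dev"), ("ENV2", offset, "qa")):
        env.update({
            "WSO2_APIM_%s_APIMGT_URL" % prefix: "https://%s:%d" % (host, 9443 + port_offset),
            "WSO2_APIM_%s_GW_URL" % prefix: "https://%s:%d" % (host, 8243 + port_offset),
            "WSO2_APIM_%s_APIMGT_USERNAME" % prefix: "admin",
            "WSO2_APIM_%s_APIMGT_PASSWD" % prefix: "admin",
            "WSO2_APIM_%s_ID" % prefix: env_id,
        })
    # The local containers are assumed to use self-signed certificates.
    env["WSO2_APIM_VERIFY_SSL"] = "false"
    return env


env = local_propagate_env()
print(env["WSO2_APIM_ENV1_APIMGT_URL"], env["WSO2_APIM_ENV2_APIMGT_URL"])
```

Such a mapping could then be passed to the script with subprocess.run(["python", "api_propagate.py"], env=dict(os.environ, **local_propagate_env()), check=True).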

Simply start the Docker containers as described above, and execute the scripts test_local_create.sh, test_local_propagate.sh, and test_local_propagate_same_instance.sh in that order. You can visit the ENV1 Publisher and ENV2 Publisher pages to see the APIs being created as the scripts execute.

Conclusion

There are various approaches to adopting CI/CD for APIs with WSO2 API Manager. With the flexibility of the Publisher REST APIs, almost any unique set of requirements can be satisfied with proper design and implementation. Although the PoC explained above uses Jenkins and Python, I have seen deployments that use Chef and private Git repositories, along with structured email-based requests, to automate the CI/CD process. The existence of such different implementations of the same concept shows how flexible WSO2 API Manager is when it comes to enabling proper DevOps processes.

Written on November 25, 2018 by chamila de alwis.

Originally published on Medium