Kothmale Valley view near Ramboda

Following up from the last two blog posts on AWS Backup, this post walks through a technical implementation of a simple backup strategy for AWS Organizations.

Let’s extend the last two posts on AWS Backup with a worked example.

In this scenario, we have an AWS Organization with three accounts: management, production (prod), and central backup (backup). There is a DynamoDB table named tracking and an RDS MySQL instance named mydb that contain critical production data in the prod account. AWS Backup needs to be set up for these two databases.

The Terraform code for this setup is at this GitHub repository.

Pre-requisites

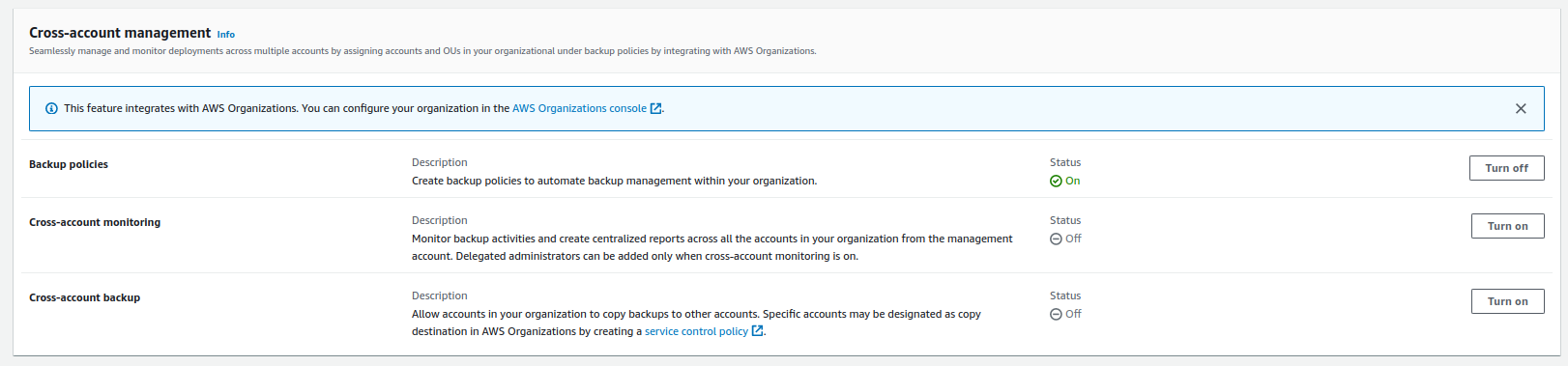

Before we can start writing a Backup Plan, we should enable a few things at the Organization level. To do this, log in to the management account with management account credentials, and navigate to the AWS Backup console. In the Settings view, scroll down to the Cross-account management section and turn on Backup Policies, Cross-account monitoring, and Cross-account backup. Additionally, the backup account can be named a delegated administrator for AWS Backup; however, for this scenario we can keep the management account as the sole administrator.

It may seem natural to mark the backup account as a delegated backup administrator, but this should be decided in context. If the solution only requires a network-boundary-based barrier for offsite backups, the backup account should not be a backup administrator. Making it one brings the additional overhead of limiting the permissions of users assigned to it so that their role separation stays clear. On the other hand, if your solution’s user model has a separate backup administrator, a delegated backup administration account makes more sense.
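
The console steps above can also be expressed in Terraform. The following is a minimal sketch, not taken from the repository: it assumes the Organization already exists and has been imported into state, and the resource names `this` and `org` are hypothetical. Trusted access for `backup.amazonaws.com` should correspond to the cross-account monitoring toggle, but treat that mapping as an assumption and verify it in the console.

```hcl
# Run against the management account.
# Assumption: the existing Organization has been imported into state first.
resource "aws_organizations_organization" "this" {
  feature_set = "ALL"

  # Trusted access for AWS Backup. This list is authoritative, so any other
  # services the Organization already trusts must be listed here as well.
  aws_service_access_principals = [
    "backup.amazonaws.com",
  ]

  # Turns on the Backup Policies policy type.
  enabled_policy_types = [
    "BACKUP_POLICY",
  ]
}

# Turns on Cross-account backup for the Organization.
resource "aws_backup_global_settings" "org" {
  global_settings = {
    "isCrossAccountBackupEnabled" = "true"
  }
}
```

Because `aws_organizations_organization` manages the whole Organization, it is worth reviewing the plan output carefully before applying this to an Organization that was configured by hand.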

Set up Central Backup Account

To perform cross-account backups, a Backup Vault is created in the central backup account. This is encrypted with a CMK, although you don’t really need a CMK in this scenario, as explained in the last post.

    resource "aws_backup_vault" "central" {
      name        = "prod"
      kms_key_arn = aws_kms_key.central_vault.arn
    }
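
The vault above refers to `aws_kms_key.central_vault`, which is not shown in this post. A minimal sketch of such a key could look like the following; the description, alias name, and rotation setting are assumptions for illustration, not the repository's actual values.

```hcl
# Hypothetical CMK for the central backup vault, relying on the default key policy.
resource "aws_kms_key" "central_vault" {
  description         = "CMK for the central backup vault (illustrative)"
  enable_key_rotation = true
}

# Optional alias so the key is easier to find in the console.
resource "aws_kms_alias" "central_vault" {
  name          = "alias/central-vault"
  target_key_id = aws_kms_key.central_vault.key_id
}
```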

A Backup Vault Policy is also specified which allows other accounts in the AWS Organization to copy snapshots into the destination vault.

    resource "aws_backup_vault_policy" "central" {
      backup_vault_name = aws_backup_vault.central.name
      policy = <<POLICY
    {
      "Version": "2012-10-17",
      "Statement": [
        {
          "Sid": "Allow all accounts under the Organisation to copy into central backup account",
          "Effect": "Allow",
          "Action": "backup:CopyIntoBackupVault",
          "Resource": "*",
          "Principal": "*",
          "Condition": {
            "StringEquals": {
              "aws:PrincipalOrgID": [
                "${data.aws_organizations_organization.current.id}"
              ]
            }
          }
        }
      ]
    }
    POLICY
    }
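
The policy interpolates `data.aws_organizations_organization.current`, which needs to be declared somewhere in the module. As a sketch, the data source and an equivalent policy written with `aws_iam_policy_document` instead of a heredoc might look like this; the document name `central_vault` and the shortened `sid` are hypothetical.

```hcl
# Looks up the Organization that the current account belongs to.
data "aws_organizations_organization" "current" {}

# Same statement as the heredoc policy, built as a policy document.
data "aws_iam_policy_document" "central_vault" {
  statement {
    sid       = "AllowOrgCopyIntoVault"
    effect    = "Allow"
    actions   = ["backup:CopyIntoBackupVault"]
    resources = ["*"]

    # Renders as "Principal": "*" in the resulting JSON.
    principals {
      type        = "*"
      identifiers = ["*"]
    }

    # Restricts the wildcard principal to accounts in this Organization.
    condition {
      test     = "StringEquals"
      variable = "aws:PrincipalOrgID"
      values   = [data.aws_organizations_organization.current.id]
    }
  }
}
```

With this in place, the vault policy could set `policy = data.aws_iam_policy_document.central_vault.json`, which lets Terraform validate the structure at plan time rather than leaving it to the API.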

That is essentially all that needs to be done for the central account.

Set up Source Account databases

As discussed in the last post, DynamoDB and RDS instances behave differently when working with AWS Backup. For the cross-account scenario to function properly they need to be set up with encryption in a specific way.

For this scenario, we specify a single KMS CMK named critical_data. This key is supposed to encrypt all business critical data in our solution in production. The key policy for this key is modified so that it can be used for RDS instances in conjunction with AWS Backup. This modification includes allowing Source and Central Account Backup to use the key for encryption and decryption.

    statement {
      sid    = "Allow source account to take backups of resources that don't support independent encryption"
      effect = "Allow"
      principals {
        type = "AWS"
        identifiers = [
          aws_iam_role.backup_rds.arn,
        ]
      }
      actions = [
        "kms:GenerateDataKey",
        "kms:DescribeKey",
        "kms:Decrypt",
        "kms:Encrypt",
        "kms:CreateGrant",
      ]
      resources = ["*"]
    }

    statement {
      sid    = "Allow destination account AWS Backup to copy snapshots made from this key, for resources that don't support independent encryption"
      effect = "Allow"
      principals {
        type = "AWS"
        identifiers = [
          var.destination_backup_service_linked_role_arn,
        ]
      }
      actions = [
        "kms:GenerateDataKey",
        "kms:DescribeKey",
        "kms:Decrypt",
        "kms:CreateGrant",
      ]
      resources = ["*"]
    }
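
The two statements above are fragments of a larger key policy. A sketch of how they might be assembled is shown below, under some assumptions: the document name `critical_data`, the root-account administration statement, and the key description are illustrative, and the variable is expected to hold the ARN of the AWS Backup service-linked role in the central account.

```hcl
variable "destination_backup_service_linked_role_arn" {
  description = "ARN of the AWS Backup service-linked role in the central backup account"
  type        = string
  # Typically of the form:
  # arn:aws:iam::<backup-account-id>:role/aws-service-role/backup.amazonaws.com/AWSServiceRoleForBackup
}

data "aws_caller_identity" "current" {}

data "aws_iam_policy_document" "critical_data" {
  # Assumed baseline statement so the source account can keep managing the key.
  statement {
    sid       = "Allow account administrators to manage the key"
    effect    = "Allow"
    actions   = ["kms:*"]
    resources = ["*"]

    principals {
      type        = "AWS"
      identifiers = ["arn:aws:iam::${data.aws_caller_identity.current.account_id}:root"]
    }
  }

  # The two backup-related statements shown above would be placed here.
}

resource "aws_kms_key" "critical_data" {
  description         = "CMK for business critical production data (illustrative)"
  enable_key_rotation = true
  policy              = data.aws_iam_policy_document.critical_data.json
}
```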

We also need to set up Advanced Features for DynamoDB backups in the Backup service. This can be done in the console by navigating to the Settings view of the Source Account Backup console and enabling the feature. The Terraform module handles this through the API.

    resource "aws_backup_region_settings" "settings" {
      resource_type_opt_in_preference = {
        "Aurora"          = false
        "DocumentDB"      = false
        "DynamoDB"        = true
        "EBS"             = true
        "EC2"             = true
        "EFS"             = true
        "FSx"             = false
        "Neptune"         = false
        "RDS"             = true
        "Storage Gateway" = false
        "VirtualMachine"  = false
        "Redshift"        = false
        "Timestream"      = false
        "CloudFormation"  = false
        "S3"              = false
      }

      # Enable advanced features for dynamodb backups
      resource_type_management_preference = {
        "DynamoDB" = true
        "EFS"      = true
      }
    }

We then set up a simple DynamoDB table and an RDS instance. The contents of these instances are out of scope for this exercise.

    resource "aws_dynamodb_table" "tracking" {
      name         = "tracking"
      billing_mode = "PAY_PER_REQUEST"
      hash_key     = "cust_id"
      range_key    = "location"

      server_side_encryption {
        enabled     = true
        kms_key_arn = aws_kms_key.critical_data.arn
      }

      attribute {
        name = "cust_id"
        type = "S"
      }

      attribute {
        name = "location"
        type = "S"
      }
    }

    resource "aws_db_instance" "mydb" {
      allocated_storage    = 10
      db_name              = "mydb"
      identifier           = "mydb"
      engine               = "mysql"
      engine_version       = "5.7"
      instance_class       = "db.t3.micro"
      username             = "foo"
      password             = "foobarbaz"
      parameter_group_name = "default.mysql5.7"
      skip_final_snapshot  = true
      kms_key_id           = aws_kms_key.critical_data.arn
      storage_encrypted    = true
    }

The Backup Vault for the Source Account can then be set up.

    resource "aws_backup_vault" "source" {
      name        = "prod"
      kms_key_arn = aws_kms_key.source_vault.arn
    }

    resource "aws_backup_vault_policy" "source" {
      backup_vault_name = aws_backup_vault.source.name
      policy = <<POLICY
    {
      "Version": "2012-10-17",
      "Statement": [
        {
          "Sid": "Allow all accounts under the Organisation to copy into central backup account",
          "Effect": "Allow",
          "Action": "backup:CopyIntoBackupVault",
          "Resource": "*",
          "Principal": "*",
          "Condition": {
            "StringEquals": {
              "aws:PrincipalOrgID": [
                "${data.aws_organizations_organization.current.id}"
              ]
            }
          }
        }
      ]
    }
    POLICY
    }

Note that the vault itself is encrypted with a separate CMK.

Write Backup Plans

With the infrastructure set up, the Backup Plans can then be written for each database.

For the example scenario, the plan uses a rule that takes hourly backups, so that troubleshooting the process is quicker.

Separate IAM Roles are created for DynamoDB and RDS to perform backups. However, at this point the permission policies for both roles look the same, as the AWS Managed Policies are directly attached to each of them for ease of use.

    resource "aws_iam_role" "backup_dynamodb" {
      name = "backup_dynamodb"
      assume_role_policy = jsonencode({
        "Version" : "2012-10-17",
        "Statement" : [
          {
            "Effect" : "Allow",
            "Principal" : {
              "Service" : "backup.amazonaws.com"
            },
            "Action" : "sts:AssumeRole"
          }
        ]
      })

      managed_policy_arns = [
        "arn:aws:iam::aws:policy/service-role/AWSBackupServiceRolePolicyForBackup",
        "arn:aws:iam::aws:policy/service-role/AWSBackupServiceRolePolicyForRestores"
      ]
    }
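
The RDS role, `aws_iam_role.backup_rds`, is referenced in the key policy but not shown here. A sketch of what it could look like follows, assuming it mirrors the DynamoDB role. It uses separate `aws_iam_role_policy_attachment` resources instead of `managed_policy_arns`, which recent releases of the AWS provider appear to flag as deprecated; the data source name `backup_assume` is hypothetical.

```hcl
# Trust policy that lets the AWS Backup service assume the role.
data "aws_iam_policy_document" "backup_assume" {
  statement {
    effect  = "Allow"
    actions = ["sts:AssumeRole"]

    principals {
      type        = "Service"
      identifiers = ["backup.amazonaws.com"]
    }
  }
}

resource "aws_iam_role" "backup_rds" {
  name               = "backup_rds"
  assume_role_policy = data.aws_iam_policy_document.backup_assume.json
}

# One attachment per AWS Managed Policy, the same two as the DynamoDB role.
resource "aws_iam_role_policy_attachment" "backup_rds" {
  for_each = toset([
    "arn:aws:iam::aws:policy/service-role/AWSBackupServiceRolePolicyForBackup",
    "arn:aws:iam::aws:policy/service-role/AWSBackupServiceRolePolicyForRestores",
  ])

  role       = aws_iam_role.backup_rds.name
  policy_arn = each.value
}
```

Keeping the roles separate leaves room to tighten each one later, for example by replacing the managed policies with policies scoped to a single resource type.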

The Backup Plan for DynamoDB takes hourly backups, starting from 05:00 UTC each day (the `5/1` hour field fires every hour from 05:00 through 23:00). The resulting snapshots are copied into the central backup account. The retention period of the recovery points is set to 30 days.

    resource "aws_backup_plan" "dynamodb" {
      name = "dynamodb"

      rule {
        rule_name         = "dynamodb_hourly"
        schedule          = "cron(0 5/1 ? * * *)"
        target_vault_name = aws_backup_vault.source.name
        start_window      = 480
        completion_window = 10080

        lifecycle {
          delete_after = 30
        }

        copy_action {
          destination_vault_arn = var.destination_vault_arn

          lifecycle {
            delete_after = 30
          }
        }
      }
    }
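
The plan takes the central vault's ARN through `var.destination_vault_arn`. One way to wire this up, sketched here under the assumption that the source and central accounts are managed as separate Terraform configurations, is to declare the variable with a light format check. The validation regex and error message are illustrative and not part of the repository.

```hcl
# Declared in the source account configuration.
variable "destination_vault_arn" {
  description = "ARN of the Backup Vault in the central backup account"
  type        = string

  validation {
    # Expects something like arn:aws:backup:<region>:<account-id>:backup-vault:<name>
    condition     = can(regex("^arn:aws[a-z-]*:backup:[a-z0-9-]+:[0-9]{12}:backup-vault:.+$", var.destination_vault_arn))
    error_message = "The destination_vault_arn value must be a Backup Vault ARN."
  }
}
```

On the central account side, the value could be exposed with an output such as `value = aws_backup_vault.central.arn` and passed across by whatever mechanism links the two configurations.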

With the backup rule in place, we then add the DynamoDB table to the Backup Plan by ARN.

    resource "aws_backup_selection" "dynamodb_tracking" {
      iam_role_arn = aws_iam_role.backup_dynamodb.arn
      name         = "dynamodb-tracking"
      plan_id      = aws_backup_plan.dynamodb.id
      resources = [
        aws_dynamodb_table.tracking.arn
      ]
    }

Virtually the same is done for the RDS instance, where a Backup Plan with the same backup rule is defined, and the mydb instance is associated with the plan directly by ARN.
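
As a sketch of what that RDS counterpart might look like, assuming it mirrors the DynamoDB plan exactly: the resource names `rds` and `rds_mydb`, the rule name, and the selection name are hypothetical, while the schedule, windows, and retention are copied from the DynamoDB rule.

```hcl
resource "aws_backup_plan" "rds" {
  name = "rds"

  rule {
    rule_name         = "rds_hourly"
    schedule          = "cron(0 5/1 ? * * *)"
    target_vault_name = aws_backup_vault.source.name
    start_window      = 480
    completion_window = 10080

    lifecycle {
      delete_after = 30
    }

    # Copy each recovery point into the central backup account.
    copy_action {
      destination_vault_arn = var.destination_vault_arn

      lifecycle {
        delete_after = 30
      }
    }
  }
}

# Associates the mydb instance with the plan by ARN, using the RDS backup role.
resource "aws_backup_selection" "rds_mydb" {
  iam_role_arn = aws_iam_role.backup_rds.arn
  name         = "rds-mydb"
  plan_id      = aws_backup_plan.rds.id

  resources = [
    aws_db_instance.mydb.arn
  ]
}
```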

Results

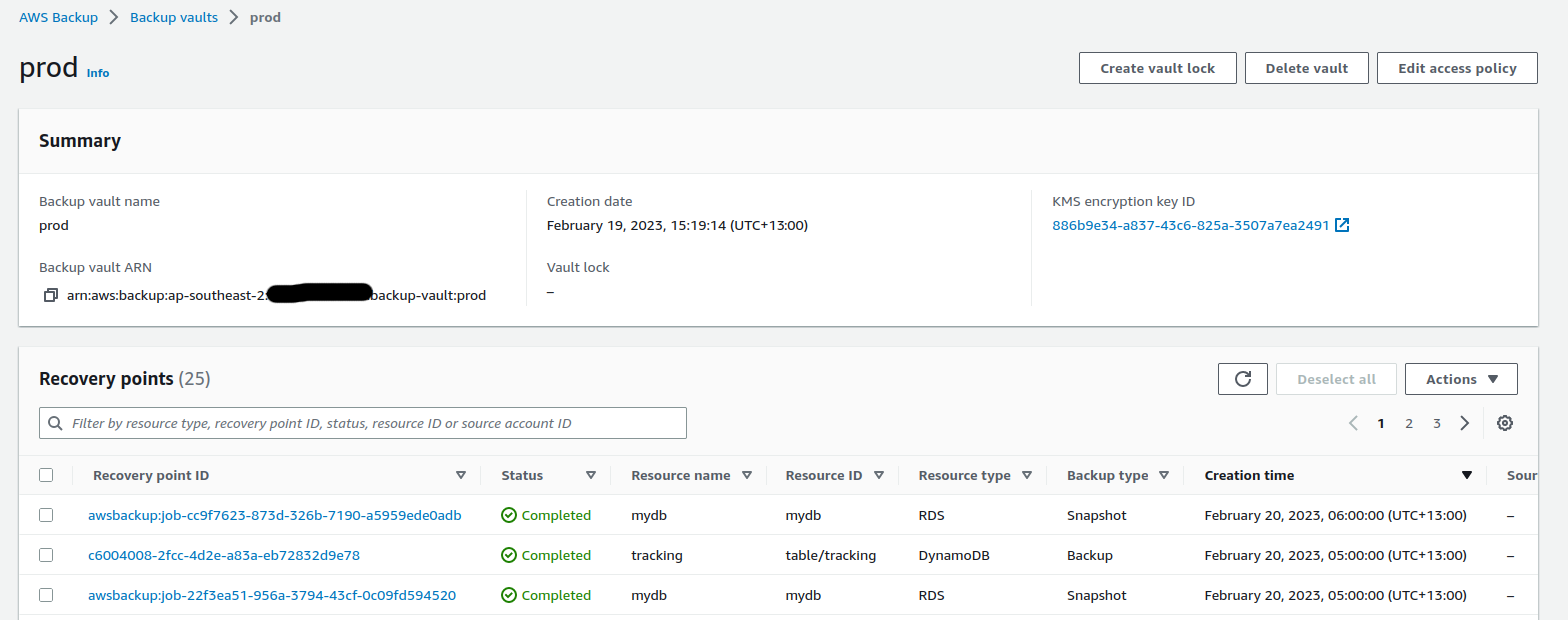

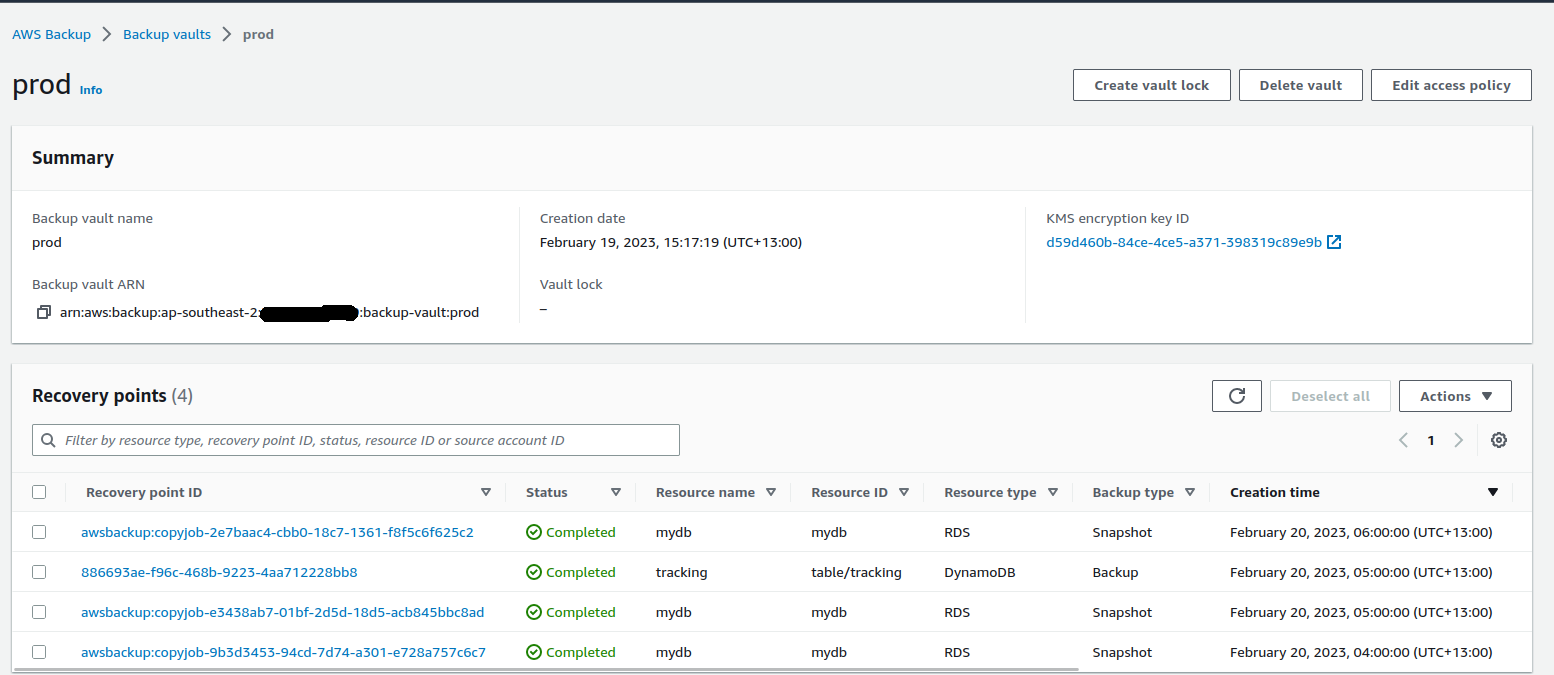

The backup jobs start getting scheduled on an hourly basis for each rule that was defined above. Once completed, the source vault starts showing the recovery points created for each of the resources associated with the plan.

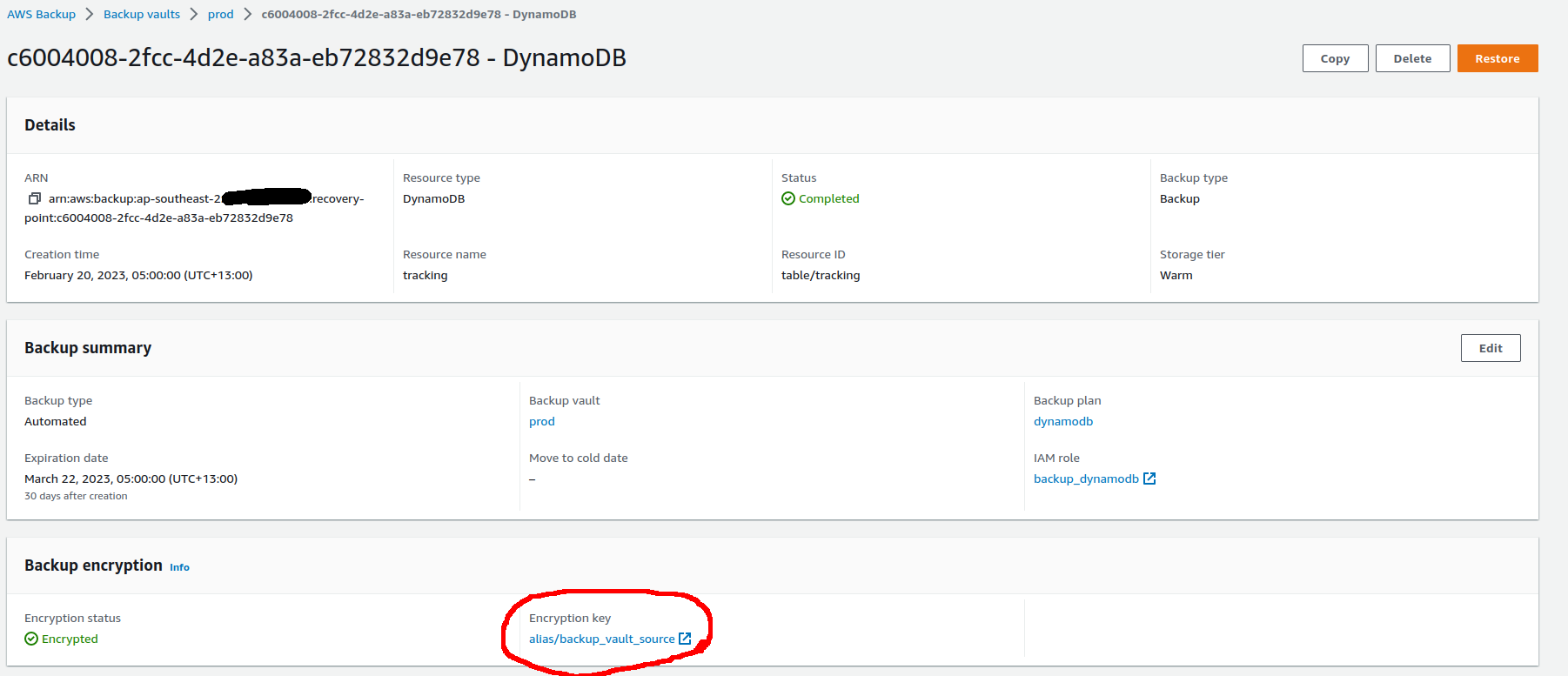

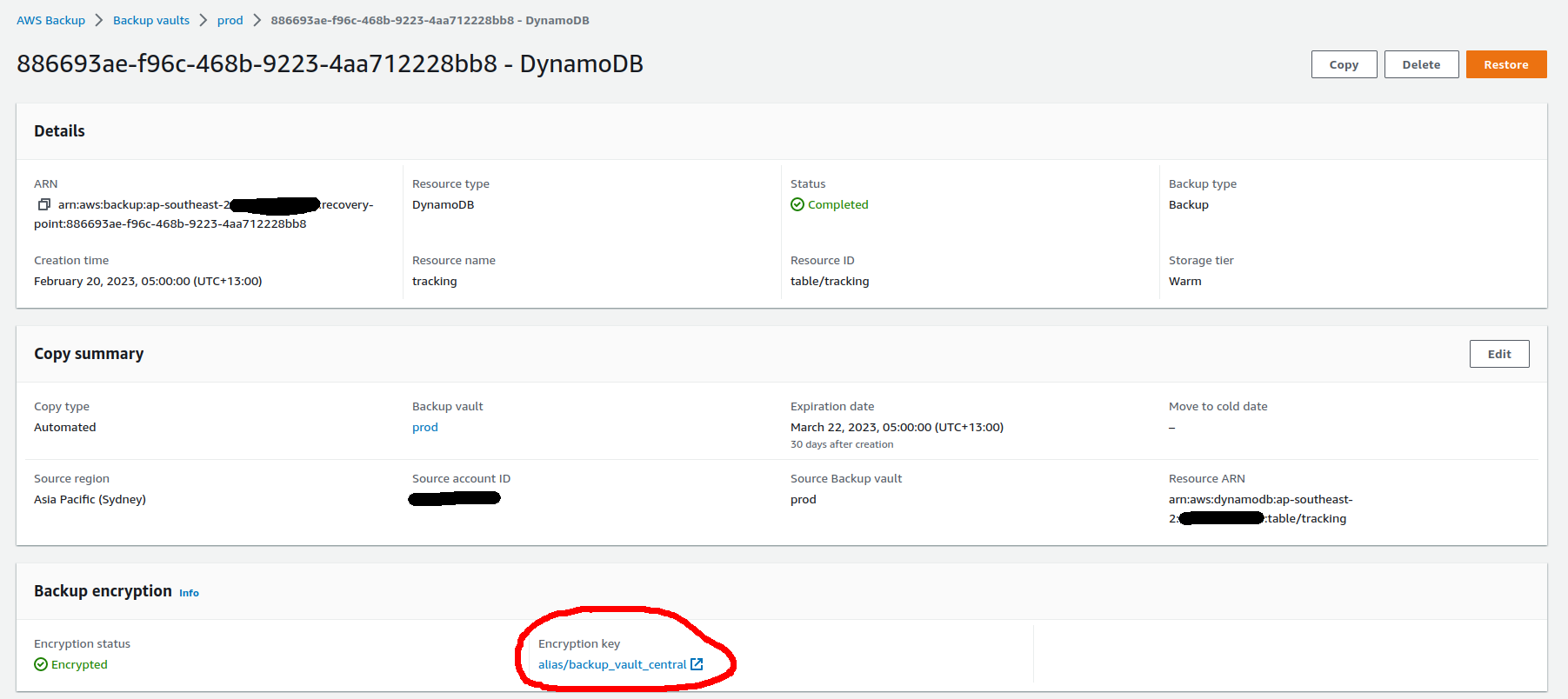

Digging into a recovery point of the DynamoDB table, we can see that the backup itself is encrypted with the vault encryption key, not the key used to encrypt the original resource.

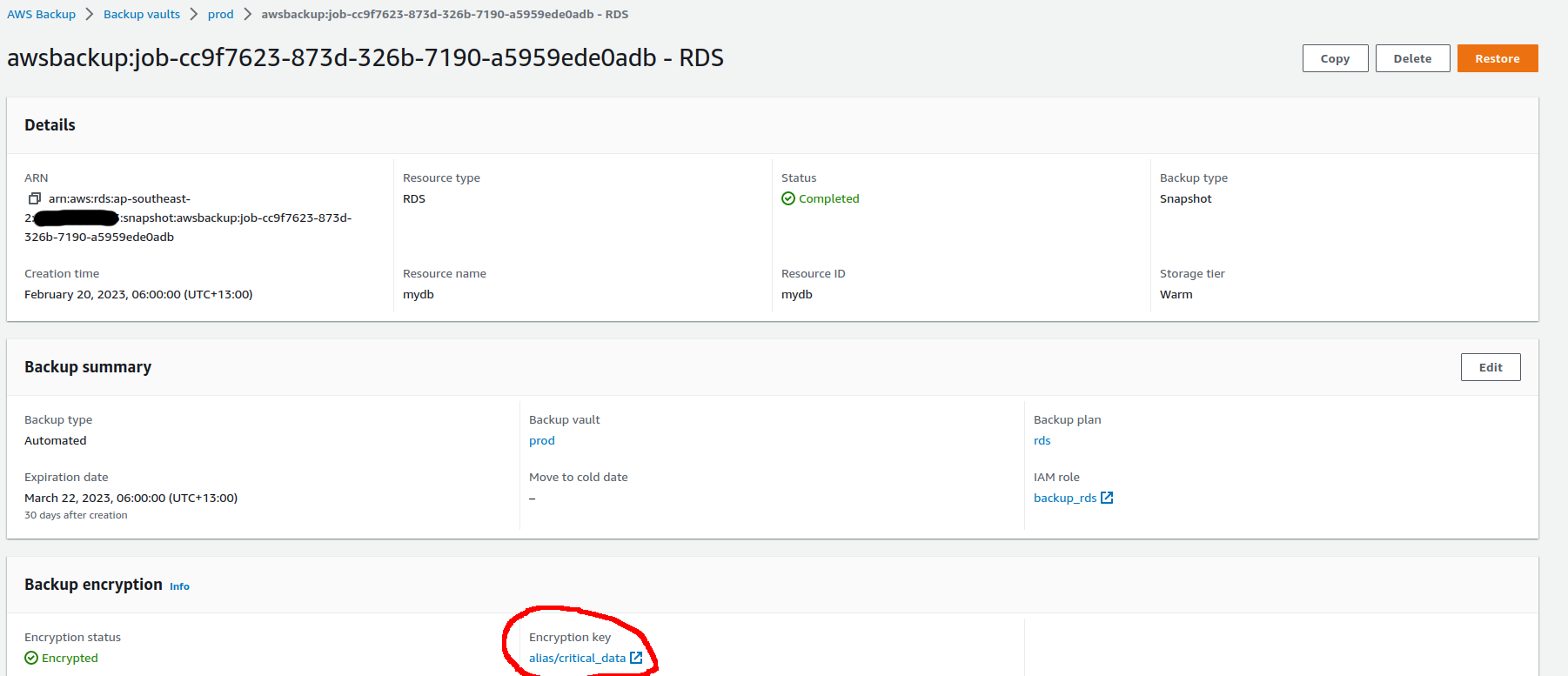

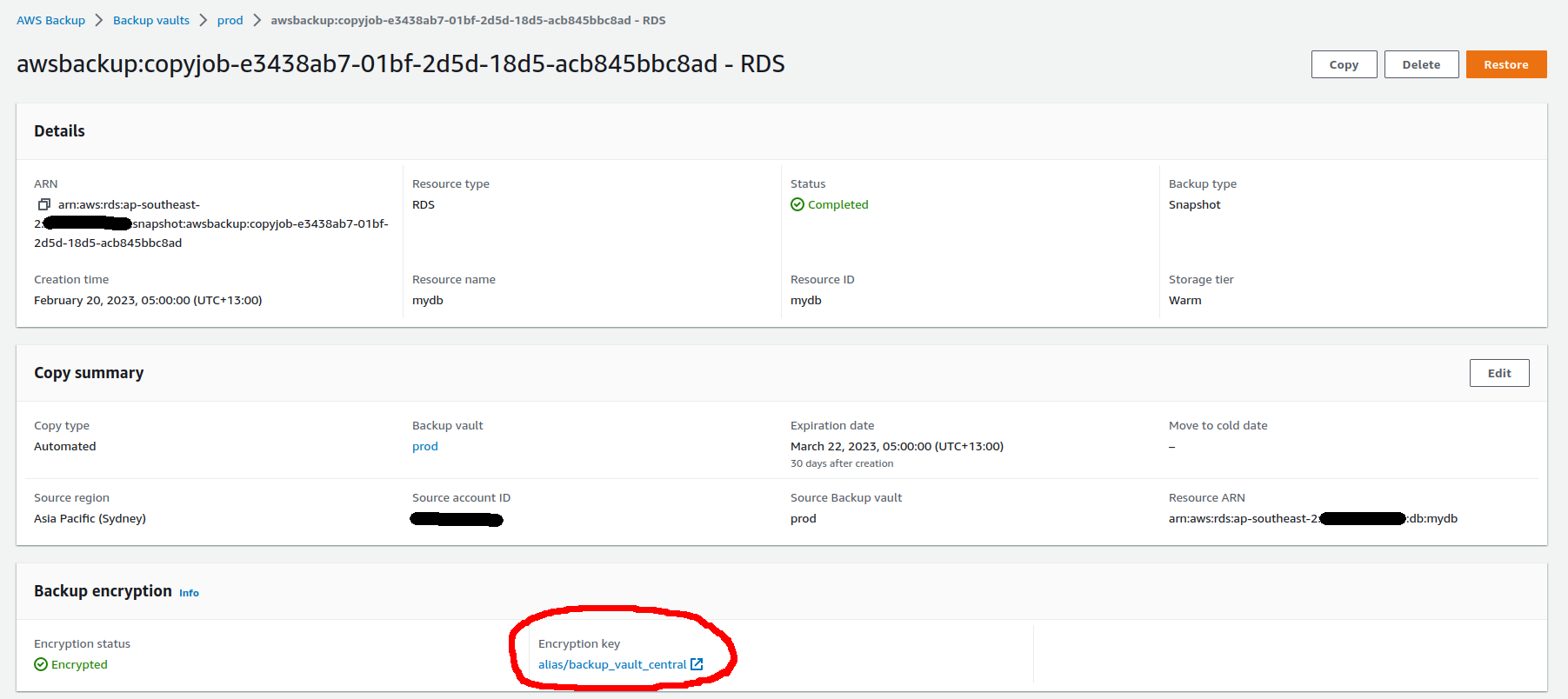

In contrast, the snapshot for the RDS instance is encrypted with the CMK used for the instance itself, not the CMK for the backup vault.

Once the backup job is done for a resource, a copy job starts almost immediately to get it copied into the destination vault. After the copy operation is complete, the destination vault lists the recovery points successfully copied.

The copies of the snapshots are encrypted with the destination vault CMK irrespective of the resource type.

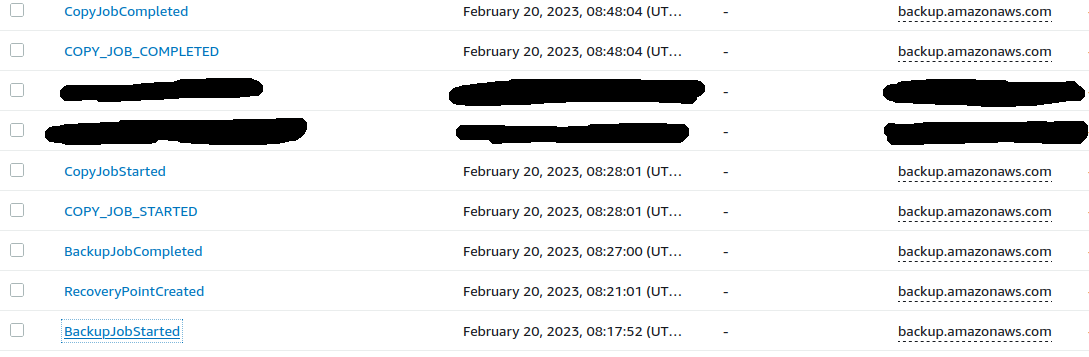

The order of the events being executed by AWS Backup is as follows.
