
The GA versions of the AWS SDKs for Rust and Kotlin were announced at AWS re:Invent 2023. The Rust SDK had been in developer preview for some time, and this release makes it suitable for production use. What follows is an introduction to the Rust SDK, its defaults, and a few detailed scenarios that differ from the default cases.

The working code for the following examples is on GitHub.

If you’re just coming across this post randomly and have no idea what an SDK (Software Development Kit) is, think of it as a library provided by the service provider for a specific programming language, so that you can interact with the service without having to write the code for that communication. It’s what Boto3 is for Python on AWS, but for Rust. The Rust SDK for AWS lets you call the services without having to write the code that handles API requests, throttling, custom endpoints, authentication, SigV4 signing and all that glory.

The Rust SDK for AWS supports almost all the services and follows the same pattern as the other language SDKs, where sensible defaults out of the box address about 80% of the use cases. SDKs for different services are provided as separate crates. The SDK requires an async runtime, since almost all major tasks done by the SDK are IO bound and benefit from async execution. It can work with any runtime as long as the runtime provides a sleep function. For the following examples, I’m using the same runtime used in the standard AWS examples, tokio.

The example scenario for working with AWS in the following sections is going to be CloudTrail. We’ll try to

- list the Trails in the given region

- list the read_only=false management events in a tabular manner

The presentation part of the above goals (“tabular manner”) isn’t that important for the “AWS” part of the title, but why not make things pretty while we are at it?

Prerequisites

The purpose of this post is to give a somewhat detailed introduction to the Rust AWS SDK. Teaching Rust or AWS is a non-goal, so the following are assumed.

- Rust + Cargo + basic Rust knowledge

- basic AWS knowledge

Let’s create a sample project for this scenario.

cargo new aws-rust-helloworld --bin

cd aws-rust-helloworld

Set up

Before the SDK can be used, the dependencies have to be added to the project. This involves adding the common aws-config crate, the service specific aws-sdk-cloudtrail crate (or aws-sdk-<service> for any other service), and the async runtime tokio.

cargo add aws-config aws-sdk-cloudtrail tokio \
--features aws-config/behavior-version-latest,aws-sdk-cloudtrail/behavior-version-latest,tokio/full

Note that we specify the behavior-version-latest feature for both the aws-config and aws-sdk-cloudtrail crates, as this is needed for scenarios that are more customised than the default use case. Behavior version is a concept that helps the SDK evolve without breaking its API when AWS introduces changes to how API communication is done.

Note that the feature full is also enabled for the tokio crate, as otherwise the async runtime will not be included.

The Cargo.toml entries will look something similar to the following.

[dependencies]

aws-config = { version = "1.1.1", features = ["behavior-version-latest"] }

aws-sdk-cloudtrail = { version = "1.9.0", features = ["behavior-version-latest"] }

tokio = { version = "1", features = ["full"] }

Simple Client Scenario

For the first example, let’s initialise the client with credentials provided through environment variables. Afterwards, let’s invoke the operations needed to get the above two tasks done.

As the SDK operations are async, let’s define the main function as async and let the #[tokio::main] attribute initialise the tokio runtime. The return type of this function is going to be a std::result::Result enum with the error type being aws_sdk_cloudtrail::Error, so it has to be imported into the context.

use aws_sdk_cloudtrail::Error;

#[tokio::main]

async fn main() -> Result<(), Error> {

//...

}

As the first step, let’s initialise the AWS client with configuration provided through environment variables. Environment variables are the same ones you’d be familiar with in any other language SDK or the AWS CLI. For authentication the following have to be provided.

- AWS_ACCESS_KEY_ID

- AWS_SECRET_ACCESS_KEY

- AWS_REGION

Populate these with the temporary credentials obtained through STS (which additionally need AWS_SESSION_TOKEN to be set) or (less ideally) permanent credentials generated in IAM.
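Before handing control to the SDK, it can help to confirm that these variables are actually set. The following is a minimal sketch that only uses the standard library; the helper missing_vars and its injected lookup closure are made up for this illustration and are not part of the SDK, which consults more sources than just these three variables.

```rust
use std::env;

/// The variables named above. Illustrative only: the SDK's default
/// configuration chain also looks at other sources (config files etc).
const REQUIRED_VARS: [&str; 3] = ["AWS_ACCESS_KEY_ID", "AWS_SECRET_ACCESS_KEY", "AWS_REGION"];

/// Returns the names of the required variables for which `lookup` yields
/// nothing or an empty string. `lookup` is injected so the check can be
/// exercised without touching the real process environment.
fn missing_vars<F>(lookup: F) -> Vec<&'static str>
where
    F: Fn(&str) -> Option<String>,
{
    REQUIRED_VARS
        .iter()
        .copied()
        .filter(|name| lookup(*name).map_or(true, |value| value.is_empty()))
        .collect()
}

fn main() {
    // In a real program the lookup is simply the process environment.
    let missing = missing_vars(|name| env::var(name).ok());
    if missing.is_empty() {
        println!("all required variables are set");
    } else {
        eprintln!("missing environment variables: {}", missing.join(", "));
    }
}
```

A check like this is only a convenience for clearer error messages; it is not required for the SDK to work.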

let shared_config = aws_config::load_from_env().await;

The function load_from_env() available in the aws-config crate goes through the default config lookup cycle (environment variables, config file locations, etc.) to determine the effective configuration for the AWS client context, and returns the configuration struct of type aws_config::SdkConfig. To be precise, it returns a future that resolves to the struct when awaited; all SDK operations return a future to be awaited.

The struct creation in the above function load_from_env() configures the following details, among others.

- async runtime sleep function

- region

- credentials

- retry and timeout config

- http client

All the configuration options contain sensible defaults that can be overridden with environment variables, config file entries, and others that are available through the default configuration chain.

How these defaults are configured can be overridden as well. We’ll explore a few use cases for this later in this post.

With this configuration struct, we can now build the specific client we want. For this, we need to import the appropriate Client implementation to the context.

//...

use aws_sdk_cloudtrail::{Client, Error};

//...

#[tokio::main]

async fn main() -> Result<(), Error> {

let shared_config = aws_config::load_from_env().await;

let client = Client::new(&shared_config);

Ok(())

}

With the initialised client, let’s perform the first step of listing the existing Trails in the provided region.

//...

use aws_sdk_cloudtrail::{Client, Error};

//...

#[tokio::main]

async fn main() -> Result<(), Error> {

let shared_config = aws_config::load_from_env().await;

let client = Client::new(&shared_config);

let req = client.list_trails();

let resp = req.send().await?;

for trail in resp.trails() {

// name() returns an Option, so fall back to a placeholder instead of panicking

println!("{}", trail.name().unwrap_or("-"));

}

Ok(())

}

Compile and run this snippet in an authenticated AWS context to see the list of Trails in the configured region. Credentials can be set through environment variables.

cargo run

It’s as easy as that. This kind of environment variable based configuration covers most of the use cases. Let’s continue and get the second operation done.

//...

use aws_sdk_cloudtrail::{

types::{LookupAttribute, LookupAttributeKey},

Client, Error,

};

//...

let read_only_attrib = LookupAttribute::builder()

.attribute_key(LookupAttributeKey::ReadOnly)

.attribute_value("false")

.build()

.unwrap();

let lookup_events_req = client.lookup_events().lookup_attributes(read_only_attrib);

let lookup_events_resp = lookup_events_req.send().await?;

//...

The above code is the Rust equivalent of the following CLI command executed in an authenticated AWS context.

aws cloudtrail lookup-events --lookup-attributes AttributeKey=ReadOnly,AttributeValue=false

Note the builder pattern used for building the lookup attribute for the operation. Builder pattern is used throughout the SDK to provide an easy API to override specific details with minimal code duplication.
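To make the pattern itself concrete, here is a minimal sketch of a builder written with only the standard library. Attribute and AttributeBuilder are hypothetical stand-ins invented for this illustration and are not the SDK's types; the sketch only mirrors the shape seen above, where setters are chained and build() returns a Result because some fields are required.

```rust
/// Hypothetical stand-in for an SDK type with two required fields.
#[derive(Debug, PartialEq)]
struct Attribute {
    key: String,
    value: String,
}

/// The builder: every field starts unset and is filled in by chained setters.
#[derive(Debug, Default)]
struct AttributeBuilder {
    key: Option<String>,
    value: Option<String>,
}

impl Attribute {
    fn builder() -> AttributeBuilder {
        AttributeBuilder::default()
    }
}

impl AttributeBuilder {
    /// Each setter takes `self` by value and returns it, which enables chaining.
    fn key(mut self, key: impl Into<String>) -> Self {
        self.key = Some(key.into());
        self
    }

    fn value(mut self, value: impl Into<String>) -> Self {
        self.value = Some(value.into());
        self
    }

    /// `build()` validates the required fields, so a missing one becomes
    /// an `Err` that the caller has to deal with.
    fn build(self) -> Result<Attribute, String> {
        Ok(Attribute {
            key: self.key.ok_or("key is required")?,
            value: self.value.ok_or("value is required")?,
        })
    }
}

fn main() {
    let complete = Attribute::builder().key("ReadOnly").value("false").build();
    println!("{:?}", complete);

    // Leaving out a required field is reported by build(), not by a setter.
    let incomplete = Attribute::builder().key("ReadOnly").build();
    println!("{:?}", incomplete);
}
```

This is why the SDK snippet above has an unwrap() after build(): the builder cannot know at compile time whether every required field was set.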

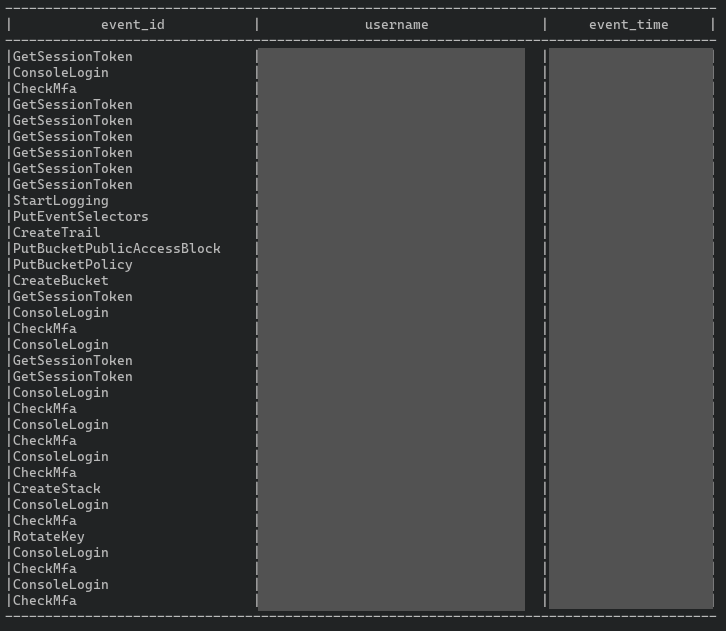

Let’s display the results of a successful execution, held in lookup_events_resp, in a tabular manner.

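//...

// needed for formatting the event time below

use aws_sdk_cloudtrail::primitives::DateTimeFormat;

//...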

println!("-----------------------------------------------------------------------------------------");

println!("|{:^30}|{:^35}|{:^20}|", "event_name", "username", "event_time");

println!("-----------------------------------------------------------------------------------------");

for event in lookup_events_resp.events() {

let event_name = event.event_name().unwrap();

// some events do not contain a username, so fall back to a placeholder

let username = event.username().unwrap_or("-");

let event_time = event

.event_time()

.unwrap()

.fmt(DateTimeFormat::DateTime)

.unwrap();

let event_time_formatted = event_time.as_str();

println!("|{:<30}|{:<35}|{:<20}|", event_name, username, event_time_formatted,);

}

println!("-----------------------------------------------------------------------------------------");

//...

}

This is a straightforward piece of code, all things considered. The result of the lookup_events() function is a builder on which send() can be invoked to get a LookupEventsOutput struct. This contains a function called events that returns a slice of Event structs that we can iterate over. The rest of the code just formats the details from each Event struct into the tabular format.
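The formatting part can be tried out on its own, without AWS. The following is a minimal sketch using only the standard library; the helpers format_row and separator and the sample values are made up for this illustration. It uses the same column widths as the snippet above and shows the placeholder for a missing username.

```rust
/// Formats one table row with the same column widths as the header above
/// (30, 35 and 20 characters). A missing username is rendered as "-".
fn format_row(event_name: &str, username: Option<&str>, event_time: &str) -> String {
    format!(
        "|{:<30}|{:<35}|{:<20}|",
        event_name,
        username.unwrap_or("-"),
        event_time
    )
}

/// A separator as wide as a row: three columns plus four `|` characters.
fn separator() -> String {
    "-".repeat(30 + 35 + 20 + 4)
}

fn main() {
    println!("{}", separator());
    println!("|{:^30}|{:^35}|{:^20}|", "event_name", "username", "event_time");
    println!("{}", separator());
    // Sample values, made up for the demonstration.
    println!("{}", format_row("CreateTrail", Some("alice"), "2023-12-01T10:00:00Z"));
    println!("{}", format_row("ConsoleLogin", None, "2023-12-01T10:05:00Z"));
    println!("{}", separator());
}
```

Note that the width in a format specifier is a minimum: values longer than the column are not truncated, so an unusually long username would push the row out of alignment.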

Simple Config Modification

The above example works with the default configuration, which is found in the context and can be loaded from environment variables. However, the ConfigLoader struct in aws-config provides a builder API that allows overriding these configuration values at initialisation if the requirement demands it. These include options such as region and profile, and advanced configuration such as credentials providers.

Let’s consider a scenario where the region of the operations should be tied down to a particular value (e.g. because data sovereignty requirements are involved), or where the code itself should provide a sensible default if the default configuration chain doesn’t provide a suitable value. In that case, we can go one step below the load_from_env() function used above and access the ConfigLoader struct and its functions.


use aws_sdk_cloudtrail::{

Client, Error,

};

#[tokio::main]

async fn main() -> Result<(), Error> {

//...

let shared_config = aws_config::from_env()

// hardcoding the region

.region("ap-southeast-2")

.load()

.await;

let client = Client::new(&shared_config);

//...

}

Or for the second case of providing a fallback value,

use aws_config::meta::region::RegionProviderChain;

use aws_sdk_cloudtrail::{

Client, Error,

};

#[tokio::main]

async fn main() -> Result<(), Error> {

//...

let shared_config = aws_config::from_env()

// hardcoding if the config isn't found in the default loading chain

.region(RegionProviderChain::default_provider().or_else("ap-southeast-2"))

.load()

.await;

let client = Client::new(&shared_config);

//...

}

In the above example, RegionProviderChain::default_provider() still tries to load a value from the default configuration chain. or_else() makes sure a fallback value is provided if that fails. This is a good way to work with sensible defaults that can be overridden.
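The idea behind such a chain is simple enough to sketch with the standard library alone. The Chain type below is a hypothetical stand-in written for this post, not the SDK's RegionProviderChain; it only borrows the method names first_try and or_else to show the "first provider that yields a value wins" behaviour.

```rust
/// A provider is anything that may or may not yield a region name.
type Provider = Box<dyn Fn() -> Option<String>>;

/// Hypothetical stand-in for a provider chain: providers are consulted in
/// order and the first one that yields a value wins.
struct Chain {
    providers: Vec<Provider>,
}

impl Chain {
    /// Starts a chain with the provider that should be asked first.
    fn first_try(provider: impl Fn() -> Option<String> + 'static) -> Self {
        Chain { providers: vec![Box::new(provider)] }
    }

    /// Appends a fallback that is only consulted if every provider before
    /// it returned `None`.
    fn or_else(mut self, provider: impl Fn() -> Option<String> + 'static) -> Self {
        self.providers.push(Box::new(provider));
        self
    }

    /// Walks the chain lazily and stops at the first value found.
    fn resolve(&self) -> Option<String> {
        self.providers.iter().find_map(|provider| provider())
    }
}

fn main() {
    // Environment first, hardcoded value as the last resort.
    let chain = Chain::first_try(|| std::env::var("AWS_REGION").ok())
        .or_else(|| Some("ap-southeast-2".to_string()));
    println!("{:?}", chain.resolve());
}
```

The order of the calls is the order of precedence, which is why the hardcoded value goes last when it is meant to be a fallback rather than an override.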

Other than region, aws_config::meta::credentials::CredentialsProviderChain provides similar functionality for fallback credentials.

Providing Credentials in Alternative Ways

So far, we’ve been loading credentials from environment variables (or any other place in the default configuration chain). Keeping credentials in environment variables is generally good practice, as it decouples the code from the different environments it will go through. It is a generally safe pattern too. There is less of a chance of you committing credentials with an AdministratorAccess policy to a public GitHub repository, to be snatched the moment they become available (you shouldn’t be working with AdministratorAccess in the first place, but let’s leave that for another post).

However, there could be edge cases where you absolutely have to deviate from this pattern and manually load the credentials, or where the credentials you’re looking for live in an external secret store that needs to be queried at runtime before talking to AWS. In this case, the credentials provider should be overridden through the credentials_provider() function of the builder before building the config struct.

The structs and the associated functions for providing alternative credential providers are contained in a different crate named aws-credential-types. This dependency should be added for the following code snippets to work.

cargo add aws-credential-types

One way of providing credentials is to directly build a Credentials struct using the function from_keys(). This allows just hardcoding the credentials in the source code. This function is only available if aws-credential-types is added with the hardcoded-credentials feature.

cargo add aws-credential-types --features hardcoded-credentials

//..

use aws_credential_types::Credentials;

use aws_sdk_cloudtrail::{

config::Region,

types::{LookupAttribute, LookupAttributeKey},

Client, Error,

Config,

};

//..

#[tokio::main]

async fn main() -> Result<(), Error> {

//..

// IMPORTANT: demo only. DO NOT hardcode credentials!!!

let credentials: Credentials = Credentials::from_keys("ACCESS_KEY", "SECRET_KEY", Some("SESSION_TOKEN".to_string()));

let shared_config: Config = Config::builder()

.credentials_provider(credentials)

.region(Region::new("ap-southeast-2"))

.build();

let client: Client = Client::from_conf(shared_config);

//..

}

Note that this example is shown because I can’t make up my mind to say “never” use it, although there’s about a 99.99% chance that you shouldn’t use this function. Even the documentation for Credentials::from_keys() mentions that this function should be dropped in favor of the more secure Credential Provider approach. So, DO NOT use this example unless a world ending scenario forces you to do so.

Note that we started using the direct builder method instead of the wrapper from_env() here. This has a different effect from the from_env() wrapper, where the default configuration chain is looked up. When using the builder() method, the defaults are what’s written in the SDK, and are not looked up in the default configuration chain.

Writing a Custom Credentials Provider

A better way to implement a custom credential retrieval logic is to write a custom credentials provider. This is done in a few short steps.

- Define the struct that denotes the custom credentials provider

- Implement the logic that retrieves the credentials as an async function which returns an aws_credential_types::provider::Result.

- Implement the trait aws_credential_types::provider::ProvideCredentials for the struct, which is ultimately a single function provide_credentials() which returns a future that returns the credentials.

For this approach, the feature aws-credential-types/hardcoded-credentials is not needed.

//...

use aws_credential_types::{

provider::{self, future, ProvideCredentials,},

Credentials,

};

//...

// create the type for the custom credentials provider

#[derive(Debug)]

struct CustomCredentialsProvider;

// implement the async logic to generate/retrieve credentials (ex:) from a secret store

impl CustomCredentialsProvider {

async fn load_credentials(&self) -> provider::Result {

// IMPORTANT: demo only. DO NOT hardcode credentials!!!

Ok(Credentials::new("ACCESS_KEY", "SECRET_KEY", Some("SESSION_TOKEN".to_string()), None, "CustomProvider"))

}

}

// implement ProvideCredentials and return a future for the credentials retrieval logic

impl ProvideCredentials for CustomCredentialsProvider {

fn provide_credentials<'a>(&'a self) -> future::ProvideCredentials<'a> where Self: 'a {

future::ProvideCredentials::new(self.load_credentials())

}

}

Then use this as the Credentials Provider when building the config struct.

//...

use aws_sdk_cloudtrail::{

config::Region,

types::{LookupAttribute, LookupAttributeKey},

Client, Error,

Config,

};

//..

#[tokio::main]

async fn main() -> Result<(), Error> {

//...

let shared_config: Config = Config::builder()

.credentials_provider(CustomCredentialsProvider)

.region(Region::new("ap-southeast-2"))

.build();

let client: Client = Client::from_conf(shared_config);

//...

}

Conclusion

The Rust SDK for AWS is generated from the Smithy language definitions for the AWS API using smithy-rs, so reading the code itself could, in rare cases, be convoluted. AWS documentation on the SDK had been a bit behind, especially during the developer preview phase. However, it looks like AWS has been filling the gaps quickly around the GA release. The best starting point is the landing page, with more details on the different service related crates in each crate’s documentation.

The working code for the above examples is on GitHub.