This is an introductory post on the problem of authenticating into AWS from K8s workloads, either from within AWS or from outside of it. I’m going to discuss three major approaches to this problem and compare them against each other. I’ll follow this up with more detailed articles (and videos) on each approach, where I’ll go into details as much as possible.

Problem Statement

The key problem I’m trying to tackle here is how to authenticate into AWS from workloads running in K8s.

The solution could range from something as easy as hardcoding IAM user credentials in the code or environment variables and calling it a day, to fully automated approaches like EKS Pod Identities. Which one you should take depends on a list of factors.

- Do you care about security? (if not, this is the universe giving you a signal to do so)

- Is your K8s cluster in AWS or outside of it?

- Is your K8s cluster on EKS or outside of it?

- Can you tolerate umbrella IAM policies (the opposite of Least Privilege)? (if you can, this is another small signal to stop tolerating them)

- How comfortable are you with third party solutions?

To define the problem more precisely, the workloads in K8s should be able to authenticate into AWS,

- with short term credentials

- with workload specific IAM permissions

- with an approach that scales well to different types of workloads

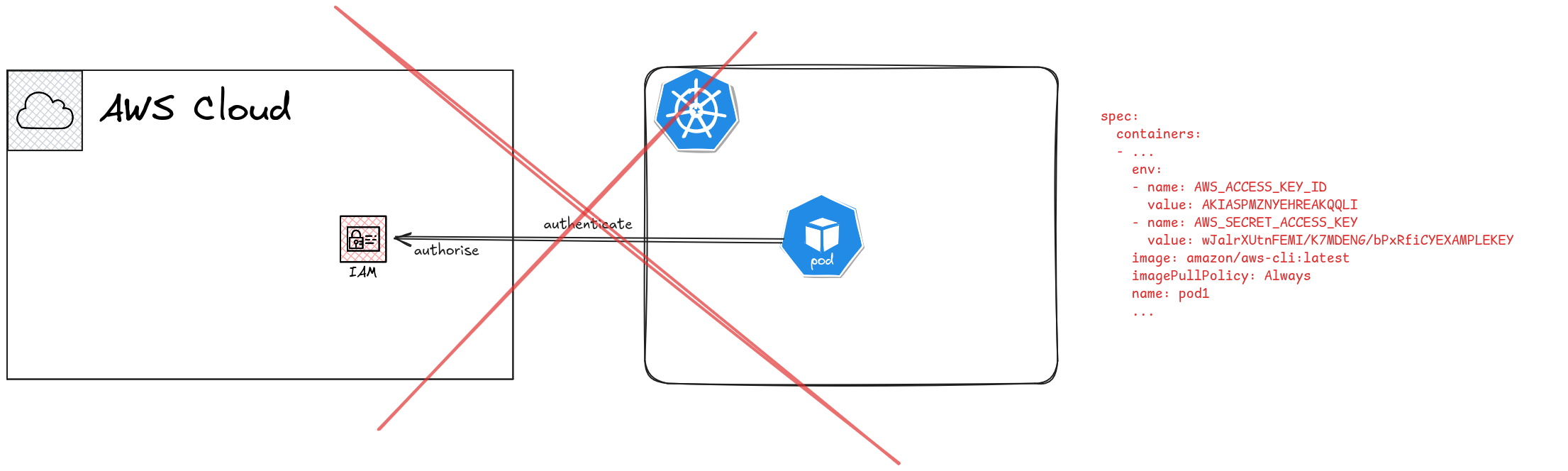

Approach 1: The worst way

The easiest and the worst way to solve this problem would be to create an IAM user in AWS, generate long term credentials, and provide the access key and the secret access key to the Pod as environment variables, secrets, or if you’re looking to hit all the points of absolute worst security, as hardcoded values in the code.

    spec:
      containers:
      - ...
        env:
        - name: AWS_ACCESS_KEY_ID
          value: AKIASPMZNYEHREAKQQLI
        - name: AWS_SECRET_ACCESS_KEY
          value: wJalrXUtnFEMI/K7MDENG/bPxRfiCYEXAMPLEKEY
        image: amazon/aws-cli:latest
        imagePullPolicy: Always
        name: pod1
        ...

It is imperative that you do NOT do this ever, even as a way to test something in 5 minutes. It’s not a broken window, it’s building a skyscraper on a flood plain. Seriously, don’t build a habit of writing credentials in source code.

Credentials have no business being in a git repository, and ideally they shouldn’t be in environment variables either. K8s Secrets are not really secrets (unless you encrypt your etcd cluster, and even then, they’re not the best of secrets).

In addition to being bad practice, this checks off none of our requirements above. This approach uses long term credentials, which is heavily discouraged by AWS. You could probably follow the Principle of Least Privilege here, but if you’re creating IAM users for this purpose, chances are the practices aren’t really incentivising attention to detail like that. And finally, you’ll be creating IAM users for each workload type, which is not really scalable even with something like Infrastructure as Code (IaC) involved.

So if you’re already following this practice, read on, you might find ways to get a better chance at hitting that sweet ISO27001 compliance.

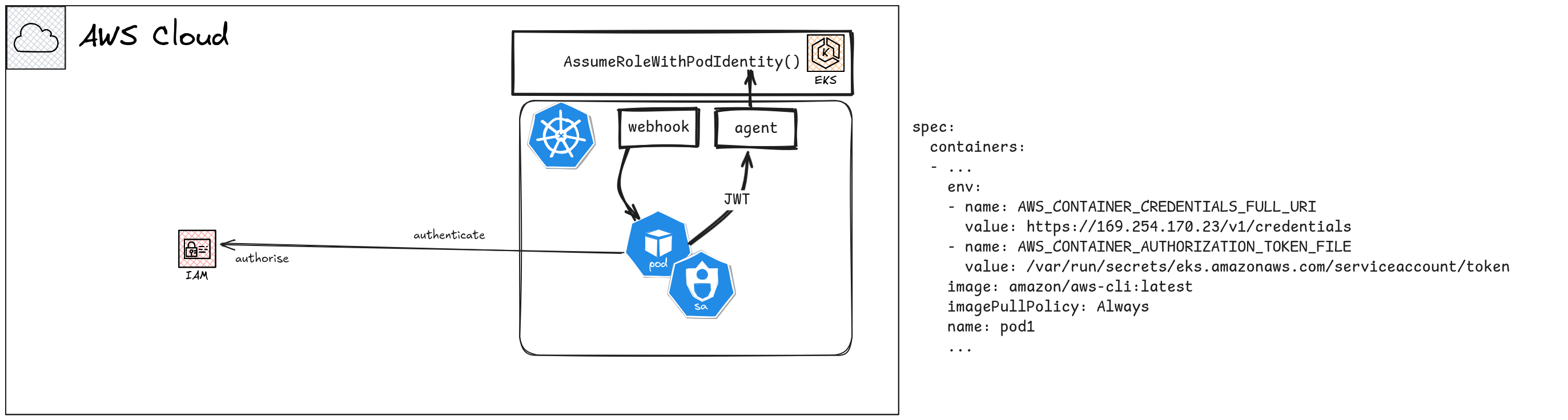

Approach 2: EKS Pod Identities

EKS Pod Identities is a relatively new feature introduced by AWS to solve this specific problem. It maps K8s Service Accounts to IAM Roles, and manages the complexity of providing temporary credentials for the Role in the Pod in the background.

Like the name suggests, EKS Pod Identities can only be used in full EKS clusters. You can’t use Pod Identities in external K8s clusters, even if they run on AWS EC2. You can’t even use Pod Identities in EKS clusters if the workloads are in Fargate, or if they are backed by external compute, such as when you register an external K8s cluster in EKS through EKS Connector. You also can’t use Pod Identities in EKS Anywhere based clusters. This functionality is fully backed by features in the AWS control plane that cannot be easily replicated outside.

The cluster administrator will need to enable the Pod Identity Agent add-on on the cluster, and then map Service Accounts to specific IAM roles in AWS.
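For that mapping to work, the IAM role has to trust the EKS Pod Identity service. Here is a minimal sketch of such a trust policy, built in Python purely for illustration. The helper name `pod_identity_trust_policy` is made up for this post; the `pods.eks.amazonaws.com` service principal and the `sts:AssumeRole` / `sts:TagSession` actions are the ones AWS documents for Pod Identity roles.

```python
import json


def pod_identity_trust_policy():
    """Trust policy letting EKS Pod Identity assume a role on behalf of Pods."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Principal": {"Service": "pods.eks.amazonaws.com"},
                # TagSession is listed because Pod Identity attaches session
                # tags (cluster, namespace, Service Account) to the session.
                "Action": ["sts:AssumeRole", "sts:TagSession"],
            }
        ],
    }


if __name__ == "__main__":
    print(json.dumps(pod_identity_trust_policy(), indent=2))
```

Note that the Service Account is not named in the trust policy at all. The link between a Service Account and the role lives in the Pod Identity association created on the cluster.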

The workloads in Pods will be provided necessary environment variables and values to obtain short term credentials through the Pod Identity Agent. The improvements in later AWS SDKs help here as well.
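To make that less abstract, here is a rough sketch of what the SDK’s container credentials provider does with those environment variables. It is not the SDK’s actual code: the function names are hypothetical, and only the two variable names (`AWS_CONTAINER_CREDENTIALS_FULL_URI` and `AWS_CONTAINER_AUTHORIZATION_TOKEN_FILE`) come from the AWS SDKs.

```python
import json
import os
import urllib.request


def build_credentials_request(env=os.environ):
    """Build (but do not send) the HTTP request for temporary credentials."""
    uri = env["AWS_CONTAINER_CREDENTIALS_FULL_URI"]
    token_path = env["AWS_CONTAINER_AUTHORIZATION_TOKEN_FILE"]
    # The projected Service Account token is re-read on every call,
    # because the kubelet rotates the file contents over time.
    with open(token_path, encoding="utf-8") as token_file:
        token = token_file.read().strip()
    return urllib.request.Request(uri, headers={"Authorization": token})


def fetch_credentials(env=os.environ, timeout=2):
    """Send the request. Only meaningful inside a Pod using Pod Identities."""
    request = build_credentials_request(env)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        # Expected to be a JSON document holding the temporary access key,
        # secret key, session token, and an expiration timestamp.
        return json.load(response)
```

In practice you never write this yourself. A recent SDK does the equivalent automatically, which is why the application code doesn’t need to know Pod Identities exist.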

I’ll follow this article up with a detailed article and a video with a deep dive into EKS Pod Identities. I’ll explain the details involved in the Pod Identities process to the most detailed level possible there.

UPDATE: The new article and the video are here.

Compared to Approach 1 above, this is light years ahead in terms of security, ease of use, and scalability. You don’t have to worry about long term credentials. You’re not keeping any credentials on the K8s side of the puzzle. The end users (in this case, the developers and the application administrators) don’t have to care about the authentication details; they just need to write and configure their applications assuming the credentials will be there.

On the cons side, you can only use EKS Pod Identities on full EKS clusters, on workloads running on EKS. You need to make sure you’re using the latest AWS SDK in the application as well.

So if your cluster is not a full EKS cluster, or if your workload has legacy code that you can’t realistically upgrade, then you’re out of luck with this approach.

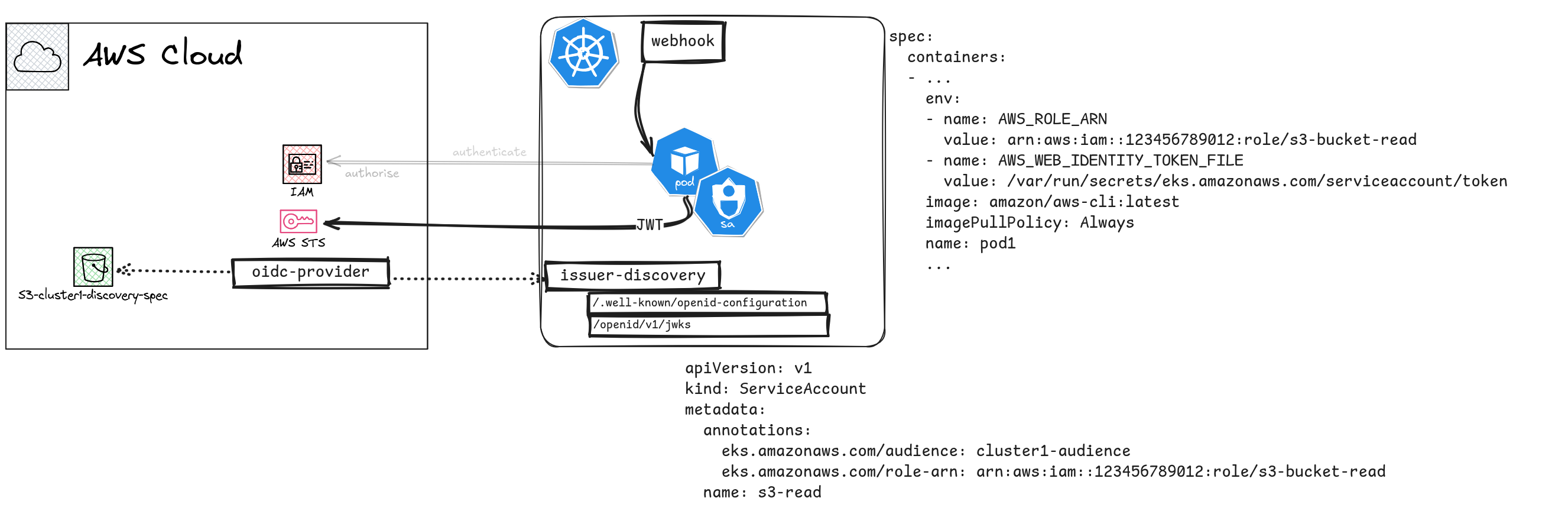

Approach 3: IAM Roles for Service Accounts (IRSA)

The next best approach would be something called IAM Roles for Service Accounts (IRSA). The core idea of IRSA is basically the same as Pod Identities (in fact, IRSA was the recommended approach for EKS before Pod Identities was introduced). The SDK in the Pod will be provided with appropriate environment variables so that it can get temporary credentials to talk to AWS.

However, several other key details are different in IRSA.

The way the SDK gets temporary credentials is different from Pod Identities. In Pod Identities, a new credentials provider method called Container Credentials is used, whereas in IRSA, the old AssumeRoleWithWebIdentity is used. The “web identity” in this case is established by using the OpenID Connect (OIDC) issuer functionality in K8s as a federated identity provider in AWS IAM. So, to keep the details brief, the Pods that have a Service Account token (through the TokenRequest API) can authenticate as federated users and assume roles, because AWS can verify the token through the OIDC endpoint in K8s.
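The federation described above ends up encoded in the trust policy of each IAM role. Here is a minimal sketch of how that policy is shaped, assuming the cluster’s OIDC issuer is already registered as an IAM identity provider. The helper name, account ID, and issuer URL are placeholders, and `sts.amazonaws.com` is the audience commonly used on EKS; a self-managed cluster may be configured with a different one.

```python
import json


def irsa_trust_policy(account_id, oidc_issuer, namespace, service_account,
                      audience="sts.amazonaws.com"):
    """Trust policy tying one K8s Service Account to one IAM role."""
    # IAM refers to the provider by its issuer URL without the scheme.
    issuer = oidc_issuer.split("://", 1)[-1]
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Principal": {
                    "Federated": f"arn:aws:iam::{account_id}:oidc-provider/{issuer}"
                },
                "Action": "sts:AssumeRoleWithWebIdentity",
                "Condition": {
                    "StringEquals": {
                        # The "sub" claim of a Service Account token.
                        f"{issuer}:sub": f"system:serviceaccount:{namespace}:{service_account}",
                        f"{issuer}:aud": audience,
                    }
                },
            }
        ],
    }


if __name__ == "__main__":
    policy = irsa_trust_policy(
        "111122223333", "https://oidc.example.com/id/ABC", "default", "s3-reader"
    )
    print(json.dumps(policy, indent=2))
```

The `sub` condition is what gives you workload specific permissions: without it, any Service Account in the cluster could assume the role.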

As with Pod Identities, expect a detailed article and a video on IRSA in the future.

IRSA is notoriously a PITA to set up. For each cluster you have to set up an IAM identity provider. If your cluster control plane changes for some reason and the OIDC token signing keys (JWKS) change with it, you have to redo the steps.

However, you can use IRSA with almost any kind of K8s cluster that needs to access AWS. Whether the cluster is in or outside of AWS is irrelevant, as long as IAM can verify the JWT provided by the workload as an authentication token. This makes it attractive for workloads that run on isolated compute but still need partial access to AWS.
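When debugging, a quick way to tell which of the approaches so far a Pod is wired for is to look at its environment. The sketch below only checks for the presence of well-known SDK environment variables. The function name and the labels are hypothetical, and it deliberately returns every match instead of guessing which one the SDK would pick first.

```python
import os

# Environment variables each approach relies on (names used by the AWS SDKs).
MARKERS = {
    "static-keys": ("AWS_ACCESS_KEY_ID", "AWS_SECRET_ACCESS_KEY"),
    "irsa": ("AWS_ROLE_ARN", "AWS_WEB_IDENTITY_TOKEN_FILE"),
    "pod-identity": (
        "AWS_CONTAINER_CREDENTIALS_FULL_URI",
        "AWS_CONTAINER_AUTHORIZATION_TOKEN_FILE",
    ),
}


def detect_credential_sources(env=os.environ):
    """Return the approaches whose environment variables are all present."""
    return [
        name
        for name, keys in MARKERS.items()
        if all(key in env for key in keys)
    ]


if __name__ == "__main__":
    print(detect_credential_sources() or "no known credential variables set")
```

If this ever reports `static-keys` alongside one of the others, that is worth cleaning up, since leftover long term keys defeat the point of the better approaches.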

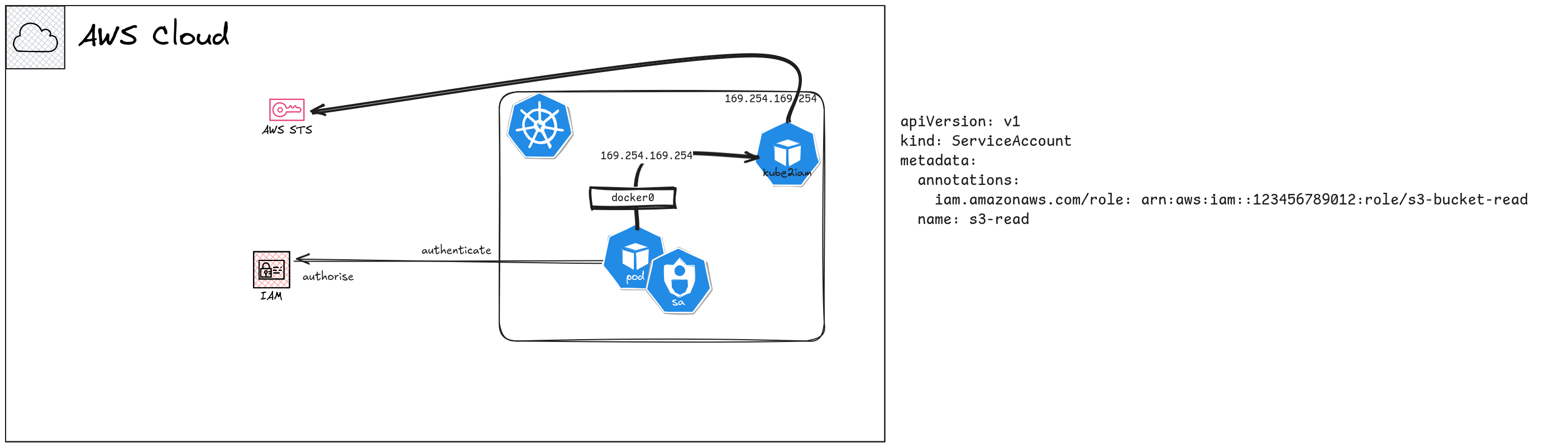

Approach 4: External Tools

Other than the above two “built-in” approaches, there are external tool based approaches we can take to solve this problem. There are tools like kube2iam that act as credential proxies. The basic idea for most of these tools is something like this.

When the AWS SDK inside a Pod tries to make an API call, it will eventually try to get credentials from the Instance Metadata Service (IMDS). Tools like kube2iam intercept this call and, based on a mapping on the Pod definition (ex: Service Account annotation), try to assume different IAM Roles for different Pods, then return the resulting credentials to the Pod. This is kind of like a credentials server (used in the EKS Pod Identities approach in the background), but as far as the SDK on the Pod is concerned, it received the credentials from IMDSv2.
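The mapping step is the heart of these proxies, so here is a toy version of it. kube2iam, for example, identifies the calling Pod by its source IP and reads an `iam.amazonaws.com/role` annotation on that Pod. Everything else in this sketch (the function name, the shape of the `pods` list) is made up for illustration and stands in for what a real proxy learns from the K8s API.

```python
ROLE_ANNOTATION = "iam.amazonaws.com/role"  # annotation key read by kube2iam


def resolve_role(source_ip, pods, default_role=None):
    """Pick the IAM role for the Pod that made a metadata request.

    `pods` is a hypothetical list of dicts with "ip" and "annotations" keys.
    """
    for pod in pods:
        if pod["ip"] == source_ip:
            # Pods without the annotation fall back to the default (if any).
            return pod.get("annotations", {}).get(ROLE_ANNOTATION, default_role)
    # Unknown caller: never hand out a role picked for some other Pod.
    return default_role


if __name__ == "__main__":
    pods = [
        {"ip": "10.0.0.5", "annotations": {ROLE_ANNOTATION: "s3-reader"}},
        {"ip": "10.0.0.6", "annotations": {}},
    ]
    print(resolve_role("10.0.0.5", pods))
```

A real proxy would then assume the resolved role using the node’s own credentials and answer the Pod in the same format IMDS uses.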

In approaches like these, you’ll be setting up trust policies on the target roles to allow the Instance Profile to assume them. This could, in rare cases, allow untrusted workloads to assume Roles that were not intended for them, but if you have good defense in depth (Service Account management, node isolation etc.), this should not be a problem. Also, since these are third party tools, you’ll have to depend on their community or a third party service provider for support. The above two approaches will have full support from AWS.
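The trust policy on a target role can be sketched like this. Strictly speaking, the principal is the IAM role attached to the node’s Instance Profile, not the Instance Profile itself. The helper name and the ARN below are placeholders.

```python
import json


def node_role_trust_policy(node_role_arn):
    """Trust policy letting the node's IAM role assume a target role."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                # Every Pod on the node shares this principal, which is why
                # the proxy's Pod-to-role mapping has to be trusted.
                "Principal": {"AWS": node_role_arn},
                "Action": "sts:AssumeRole",
            }
        ],
    }


if __name__ == "__main__":
    arn = "arn:aws:iam::111122223333:role/k8s-node"
    print(json.dumps(node_role_trust_policy(arn), indent=2))
```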

You can use these third party tools in clusters outside of AWS too. However, you’ll need to set up trust between the compute layer and AWS in some way, using something like IAM Roles Anywhere. In that case, the trust relationships of the target Roles should trust the Role associated with the IAM Roles Anywhere Trust Anchor.

Conclusion?

Well, not exactly. I’m planning to do follow up videos and articles on each of these approaches after this. There are some interesting details about each that I can’t really cover in a generic article like this. So expect more in this space!!