The Tea app getting hacked is an interesting case.

It’s not technically a hack. No existing security measures were breached, and there was no complicated social engineering, no zero-days, and no unpatched CVEs getting exploited. What happened is what’s called a Data Extraction. Data that should have been private was public, and naturally, people extracted it. Twice!!

Typically, when any solution worth its salt goes into production (i.e. it is subjected to government oversight, has some regulatory pressure, or has some critical impact on a business), it gets a risk evaluation, and Data Extraction is a key risk that gets focus. For example, it’s usually why you don’t get to use production data in test environments (however much the developers complain).

But it turns out, no one at Tea ever thought of risk. There are even some rumours that the whole app was vibe coded, which I’m not sure is 100% correct. But it is pretty clear that the people in charge over there had no experience or qualifications to handle data as sensitive as what they requested from their users.

The first hack happened because of a storage bucket that had public access enabled. I couldn’t find exactly why the second hack happened, but it looks like the database was also publicly accessible.

So as someone who’s obsessed with software and cloud architecture, I was flabbergasted (no way I’m saying this in the video) at how people keep making this mistake. Even a brief study of basic cloud architecture concepts drills into you why public access for resources that contain private data is a very bad idea. You don’t have to be a genius to understand that just because a bucket name is randomised does not mean it’s secure. You don’t randomise the location of your windows and keep them open, hoping the thieves won’t check every one of them.

So, I kinda wanted to reproduce what happened at Tea. Although I don’t know exactly what went down, I’m going to make some assumptions.

- Some level of “vibe-coding” happened (undesigned code and systems)

- Data was publicly available, either iterated or mass downloaded (it actually looks like there was a public descriptor file or payload that contained URLs of the files)

- Minimal security reviews were done (I can’t believe NO security review was done, no one could be that stupid, right?… right?)

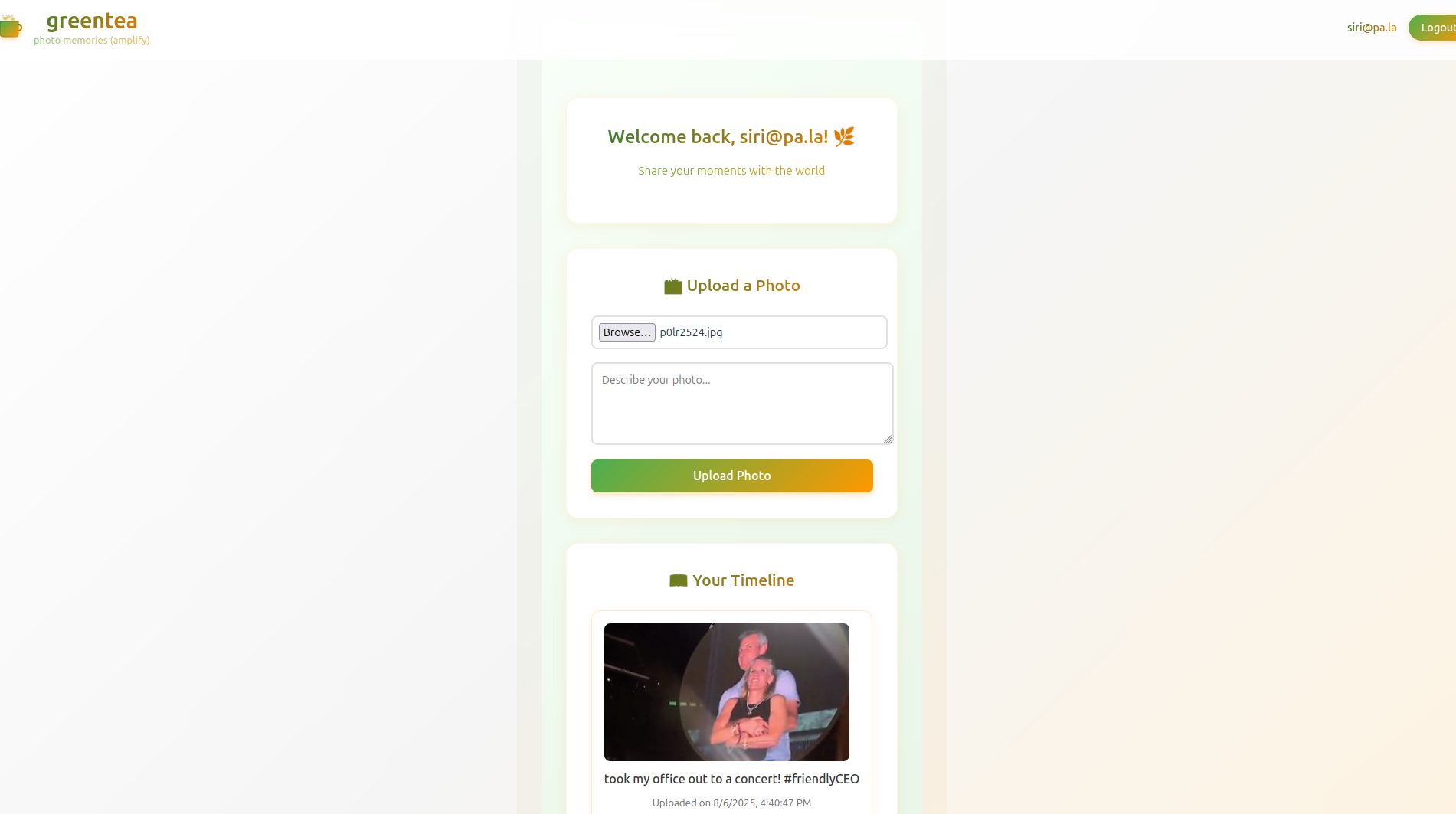

So I went ahead and vibe coded a simple web app. It’s a bare-bones workflow: log in, upload photos, and view them back.

The code is available on my GitHub profile.

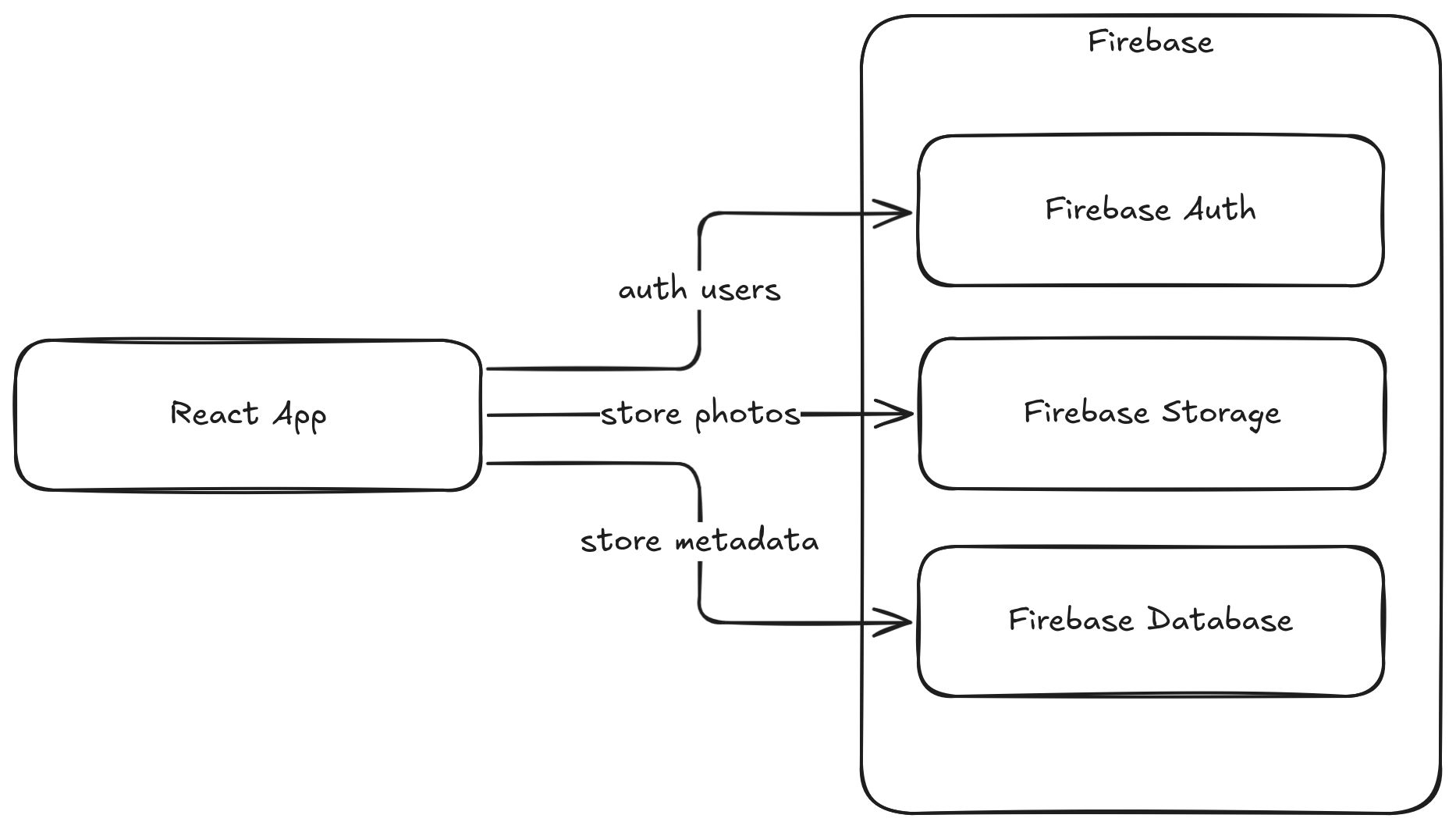

The prompt I provided is something along the lines of “make a web app that a user can login and upload photos. They should be able to view the photos they uploaded. Use firebase as the backend. Vite+React as the frontend”

Tea used Firebase as the backend, and it looks like both the database and the storage bucket were open for public access.

I’m emphasising the fact that I “vibe-coded” this, because I want to show that there was no explicit software or cloud architecture design before the application. Just a couple of business requirements along with one technical directive to use Firebase and vite+react.

What ended up getting generated was something like this.

The model(s) pretty much got the code right in the first run (except for a couple of manual fixes), and I was soon posting images to my own gossip site. I was even provided really straightforward information on how to set access rules on the Storage Bucket and the Database.

```
service firebase.storage {
  match /b/{bucket}/o {
    match /photos/{userId}/{allPaths=**} {
      allow read, write: if request.auth != null && request.auth.uid == userId;
    }
  }
}
```
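To make that rule easier to reason about, here is a minimal TypeScript model of the same check. It is only an illustration of the logic, not how Firebase evaluates rules on its side; `canAccessPhoto` and the sample user IDs are names I made up for this sketch.

```typescript
// Hypothetical model of the Storage rule above. Firebase evaluates the real
// rule server-side; this only mirrors its logic for illustration.

/** UID of the signed-in user, or null when the request is unauthenticated. */
type AuthUid = string | null;

function canAccessPhoto(authUid: AuthUid, objectPath: string): boolean {
  // Mirrors `match /photos/{userId}/{allPaths=**}`: an object path that sits
  // under photos/<userId>/.
  const match = /^photos\/([^/]+)\/.+$/.exec(objectPath);
  if (match === null) return false; // no rule matches, so access is denied

  const userId = match[1];
  // Mirrors `request.auth != null && request.auth.uid == userId`.
  return authUid !== null && authUid === userId;
}

console.log(canAccessPhoto("alice", "photos/alice/selfie.jpg")); // true
console.log(canAccessPhoto("bob", "photos/alice/selfie.jpg"));   // false
console.log(canAccessPhoto(null, "photos/alice/selfie.jpg"));    // false
```

The last case is the one that matters for the Tea story: an unauthenticated request never gets through.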

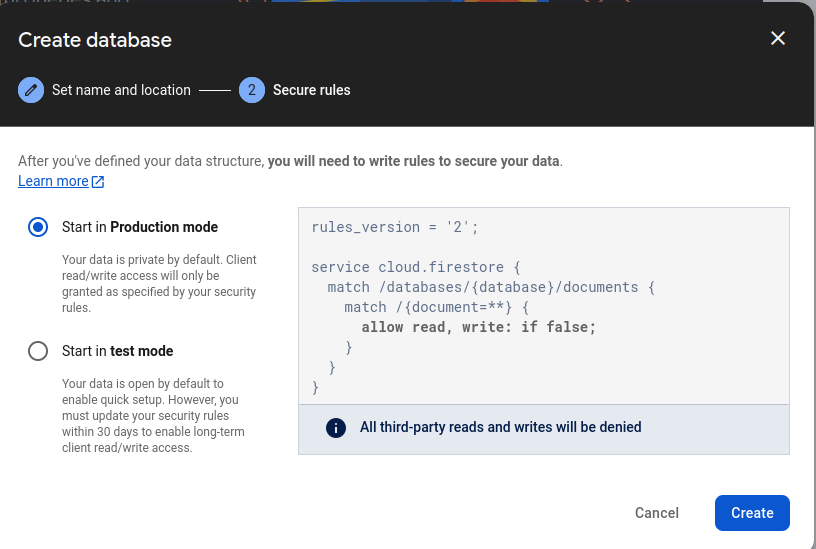

In fact, when I was creating the Storage Bucket and the Database, Firebase itself locked down the resources with private-by-default rules.

You really can’t go wrong with all the signals being sent your way. DON’T OPEN THIS UP!

Now, I did notice that the code generated in the first run used getDownloadURL() to generate a URL for a given storage object. This returns a URL that contains an opaque security token, so anyone holding the URL can fetch the object without authenticating. This is similar to Signed URLs in S3, where the credentials that generate the URL are “contained” in the URL itself. One difference: as far as I can tell, Firebase download URLs are not actually temporary and stay valid until the token is revoked.
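To see why that token matters, here is a small sketch that pulls apart a download URL. The URL shape follows what Firebase download URLs generally look like, but the bucket, object path, and token are placeholders I made up, and `describeDownloadUrl` is a hypothetical helper, not part of the Firebase SDK.

```typescript
// Hypothetical example values: the bucket, object path, and token are made up.
const sampleUrl =
  "https://firebasestorage.googleapis.com/v0/b/example-app.appspot.com/o/" +
  "photos%2Falice%2Fselfie.jpg?alt=media&token=00000000-0000-0000-0000-000000000000";

interface DownloadUrlParts {
  objectPath: string;   // decoded path of the object inside the bucket
  token: string | null; // access token embedded in the URL, if any
}

function describeDownloadUrl(rawUrl: string): DownloadUrlParts {
  const url = new URL(rawUrl);
  // The object path is the URL-encoded segment after "/o/".
  const encodedPath = url.pathname.split("/o/")[1] ?? "";
  return {
    objectPath: decodeURIComponent(encodedPath),
    token: url.searchParams.get("token"),
  };
}

const parts = describeDownloadUrl(sampleUrl);
console.log(parts.objectPath);     // photos/alice/selfie.jpg
console.log(parts.token !== null); // true
```

Nothing else is needed to fetch the file. Whoever has the full URL has the access, which is why it behaves like a bearer credential.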

Yes, yes, I know it’s not the credentials themselves in the URL, out of scope for now.

So was that how the data got leaked? That can’t be, because you need to be authenticated already to generate this URL, and then you need to iterate through every user login to get all the files.

But I wanted to plug that hole (I think it can be argued that this is not a hole per se, but let’s be secure anyways), so I switched to (or asked the model to switch to) using the getBlob() or getBytes() functions, which download the file directly instead of generating a URL for the browser to load.
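Roughly, the switch looks like the sketch below. To keep it runnable without a Firebase project, the actual SDK call is passed in as a function: `BytesFetcher` and `loadOwnPhoto` are hypothetical names, and in the real app the fetcher would be something like `(path) => getBytes(ref(storage, path))` from "firebase/storage".

```typescript
// Hypothetical sketch: `BytesFetcher` stands in for the Firebase call, e.g.
//   (path) => getBytes(ref(storage, path))
// with getBytes and ref imported from "firebase/storage".
type BytesFetcher = (objectPath: string) => Promise<ArrayBuffer>;

async function loadOwnPhoto(
  uid: string,
  fileName: string,
  fetchBytes: BytesFetcher,
): Promise<Uint8Array> {
  // Same layout the Storage rule expects: photos/{userId}/{file}.
  const objectPath = `photos/${uid}/${fileName}`;
  // The bytes are returned directly by the fetcher, so no shareable,
  // token-bearing URL is created along the way.
  const buffer = await fetchBytes(objectPath);
  return new Uint8Array(buffer);
}
```

In the browser you would then wrap the bytes in a Blob and hand the image tag a blob: URL from URL.createObjectURL(), which only works inside the current page.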

There, a reasonably secure web app. Not so hard now, was it?

Okay, if someone competent points Burp Suite at this, there’s bound to be some pretty horrific findings, but let’s go with the assumption that minimal security reviews were done. Someone could’ve actually looked at the Network tab in the browser dev console.

Well, I was still not sure how a mind-bogglingly stupid mistake like keeping a Firebase Storage bucket public happened. I was careless, did minimal security review, and was still able to focus on potential issues, all within a couple of hours of vibe coding, reading documentation, and just general software engineering practice.

Okay, again, granted, this “experiment” is flawed from the start: I can’t get rid of my bias against making this specific mistake. But I’m not trying to be unbiased, I’m trying to prove a point.

See, the general security practices are not bound to any Cloud provider implementation. You don’t have to be a GCP/Firebase expert to understand that data classified as PII should not be available on a public bucket. In fact, the people who usually check for these kinds of gaps, information security auditors, tend to be less technical than regular software/cloud engineers. If anyone with an inkling of software security risks had one meeting with an engineer and a structured set of controls (ISO 27001, CPRA, anything!) to consider the risks the company faces with their engineering practices, this situation could have been avoided. It looks like the founders are based in California, so did no one who gave them money during funding do any due diligence?

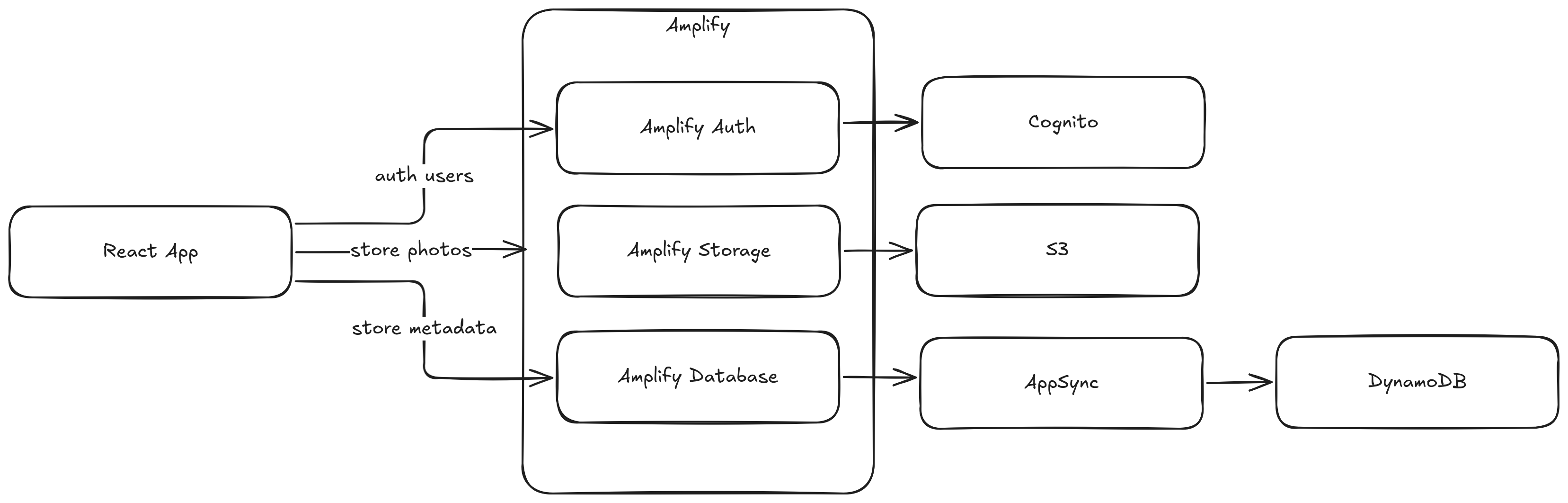

I wanted to prove my point more. It doesn’t matter which tech stack you use, security is security! I vibe coded the same app using AWS services, S3, Amplify, DynamoDB, AppSync, and Cognito.

Amplify Storage’s getUrl() function is similar to Firebase’s getDownloadURL() function, and generates a short-lived S3 Signed URL to access the photos. I didn’t bother locking down access to photos per user, because that point has already been driven home. It’s just a matter of fixing the bucket policy.
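“Short-lived” is something you can read straight off the URL. Here is a minimal sketch that works out when an S3 presigned URL stops working, based on its X-Amz-Date and X-Amz-Expires query parameters. The URL below is made up (bucket, key, signature, and the 900-second lifetime are all placeholder values), and `signedUrlExpiry` is a hypothetical helper.

```typescript
// Hypothetical presigned URL: bucket, key, and signature values are made up.
const signedUrl =
  "https://example-bucket.s3.amazonaws.com/photos/alice/selfie.jpg" +
  "?X-Amz-Algorithm=AWS4-HMAC-SHA256&X-Amz-Date=20250801T120000Z" +
  "&X-Amz-Expires=900&X-Amz-SignedHeaders=host&X-Amz-Signature=abc123";

function signedUrlExpiry(rawUrl: string): Date {
  const params = new URL(rawUrl).searchParams;
  const signedAt = params.get("X-Amz-Date");    // e.g. 20250801T120000Z
  const lifetime = params.get("X-Amz-Expires"); // lifetime in seconds
  if (signedAt === null || lifetime === null) {
    throw new Error("not a SigV4 presigned URL");
  }
  // Convert the compact timestamp to ISO 8601 so Date can parse it.
  const iso = signedAt.replace(
    /^(\d{4})(\d{2})(\d{2})T(\d{2})(\d{2})(\d{2})Z$/,
    "$1-$2-$3T$4:$5:$6Z",
  );
  return new Date(Date.parse(iso) + Number(lifetime) * 1000);
}

console.log(signedUrlExpiry(signedUrl).toISOString()); // 2025-08-01T12:15:00.000Z
```

A leaked URL like this is only useful for a few minutes, which limits the damage compared to a link that never expires.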

There’s some Cognito Identity Pool stuff happening that I would’ve handled in the software, but for a vibe-coded app, this is still enough security to tick off most standard risks and controls.

So what did we learn?

The first lesson is that security is not technical. You don’t have to be someone who codes for a living to understand that PII in a public bucket is a very bad idea. In fact, I’d even say most coders are ill-equipped to understand this fact.

Taking an even stricter position, I’d say any public storage bucket is a bad idea from its inception. Unless the data you’re going to put in that bucket should be available to any person on earth, you should not make a bucket publicly accessible. If you’ve thought “maybe I should make the bucket public for this”, you’ve already fucked up. Accept the fact, reverse, and fix course.

AI is not going to fix stupid, no matter how many agents you put in charge with a “spec” in hand. Hire an architect, control the overeager product manager, and focus a bit on the legal and regulatory pressures your business faces.

In fact, it’s really hard to make a blunder like this in regular software engineering practices. The app I built is really simple, but for a more complex application there’s bound to be “a couple of more passes” over this part of the architecture. So this decision to make the bucket and the database public has to be a deliberate decision, which means, some dumbass out there thought they’d keep these resources public without the hackers finding out.

I know the words I’m using are a bit stronger than usual, but when you make photos with EXIF data from your users available for EVERYONE to download, some stern discussion is needed.

So remember kids, hire a good cloud architect and avoid potential jail time.

That’s it, no outro.