A loading tunnel in Wrights Hill Fortress

I made a thing (this time more niche than the last project).

I’ve been using Pi-hole as a DNS ad-blocker and general local DNS solution for about two years now. For most of that time I lived dangerously, with a single old Raspberry Pi 2 running Pi-hole as the only DNS server on the local network.

That changed recently, when I added a couple more Pi-hole servers to the network, running as Docker containers.

I quickly found that keeping the configuration in sync across these Pi-holes was becoming a pain. I’ve been adding a lot of local services recently, and each new local DNS entry had to be replicated by hand on the other instances: a manual export, a manual copy, and a manual import, repeated for every node.

The first thing I did was check whether the Pi-hole CLI supports the export and import operations. While it can create a new backup, restoring that backup through the CLI is, strangely, not an option. The natural next step was to write a tool myself.

Introducing pihole_restore

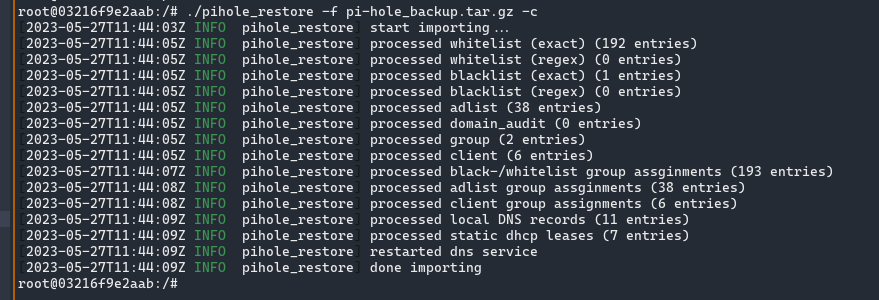

pihole_restore is a CLI tool that imports a Pi-hole backup archive into a running Pi-hole instance. It is a pretty straightforward task, so there is not much more to elaborate on here.

```shell
pihole_restore -f pihole-backup.tar.gz
```

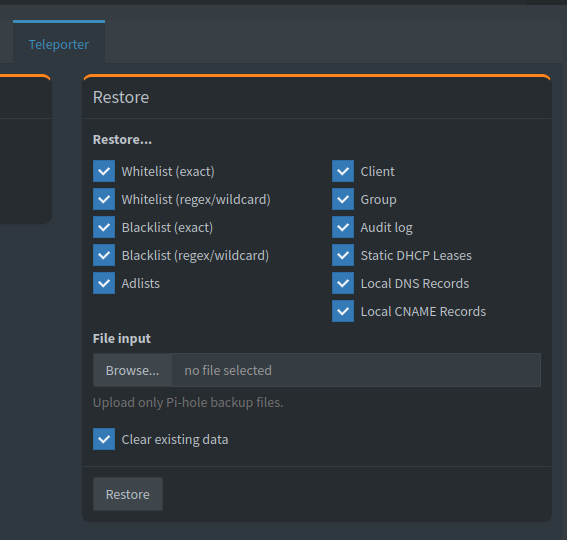

It also lets you selectively restore only some of the configuration, such as local DNS entries, adlists, etc. Essentially, I’ve reproduced the Web UI’s import functionality as a CLI.
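pihole_restore expects a gzip-compressed tar archive. Before pointing it at a file that has travelled between machines, a quick sanity check with plain tar can save a confusing failure. The sketch below is a hypothetical helper, check_backup_archive, which is not part of pihole_restore; it only confirms the file is a readable .tar.gz and lists its entries.

```shell
#!/usr/bin/env bash
# Hypothetical pre-flight helper (not part of pihole_restore): verify that a
# file is a readable gzip-compressed tar archive and list its entries.
check_backup_archive() {
  local archive="$1"

  if [ ! -f "$archive" ]; then
    echo "no such file: ${archive}" >&2
    return 1
  fi

  # `tar -tzf` lists the archive without extracting it, and exits non-zero
  # if the file is not valid gzip/tar data.
  if ! tar -tzf "$archive"; then
    echo "not a readable .tar.gz archive: ${archive}" >&2
    return 1
  fi
}
```

Used as a guard, it would look something like check_backup_archive pihole-backup.tar.gz > /dev/null && pihole_restore -f pihole-backup.tar.gz.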

The CLI shipped with the Pi-hole distribution is, for the most part, a Bash wrapper around the PHP scripts behind the Web UI. This is especially true for the admin sub-command (pihole -a), which simply calls the relevant PHP script directly.

```shell
# the cli command
pihole -a teleporter

# translates into
php "${webroot}/admin/scripts/pi-hole/php/teleporter.php"
```

This is why creating a backup archive is possible through the CLI but restoring one is not. A single PHP script, teleporter.php, handles both functions, and only the backup code branch can be reached by invoking it directly through the php interpreter. The restore functionality sits behind a check on a request variable, which the server only populates when the Web UI form is submitted.

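```php
if (isset($_POST['action'])) {
    // import configuration: only reachable when the Web UI form is submitted
} else {
    // backup configuration: the branch taken when run as `php teleporter.php`
}
```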

I first tried to see if I could wrangle php into somehow injecting variables into the $_REQUEST object. It’s been 12 years since I last touched PHP, and the PTSD kicked in pretty quickly.

Once I had read this part of the code base and found the related scripts, writing a CLI tool was a straightforward task. So I decided to complicate things and learn Rust while doing it.

Learning a new programming language is fun. It’s even more fun when you actually build something useful while learning it. I learnt Go while writing another side project that never saw the light of day (a UI for the Docker registry of the company I worked for at the time). There’s no reason that magic can’t be repeated.

Learning Rust was indeed a challenge. The last language I learnt was TypeScript (and, I guess, ECMAScript 2016), and Rust is… a bit different (fun times with borrowing).

One of the best perks of being a late adopter is that the toolchains and the ecosystem are already well established. It was fairly easy to learn the tools, start writing code, and pick up common practices along the way.

Usage

Now that I’m coming down from the high of finishing a project, let’s talk about how this binary can actually be used.

I use it alongside syncthing, and keep creating the backup as a manual step. I’m still introducing changes to my local setup, so for the time being I want some control over when those changes are propagated. This will probably become a cron job in the future.

Whenever I make a change on the “primary” Pi-hole server, I run pihole -a teleporter on it, which produces the backup archive I need. I have syncthing sync that directory to the two secondary Pi-hole servers, which usually happens within a minute.
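When that manual step turns into a cron job, it could be wrapped in a small function. The sketch below assumes that pihole -a teleporter writes the archive into the current working directory (worth verifying against your Pi-hole version), so it runs the command from inside the syncthing-shared folder. The function name create_backup, the default path, and the SYNC_DIR and PIHOLE_CMD variables are all hypothetical; PIHOLE_CMD exists only so the function can be exercised with a stub instead of a real Pi-hole.

```shell
#!/usr/bin/env bash
# Hypothetical backup helper for the "primary" Pi-hole node.
# Assumption: `pihole -a teleporter` writes the archive to the current
# working directory, so it is run from inside the syncthing-shared folder.
create_backup() {
  # The default path is hypothetical; point SYNC_DIR at your shared folder.
  local sync_dir="${1:-${SYNC_DIR:-/srv/syncthing/pihole}}"
  # PIHOLE_CMD is a hypothetical override, e.g. a full path or a test stub.
  local pihole_cmd="${PIHOLE_CMD:-pihole}"

  if [ ! -d "$sync_dir" ]; then
    echo "sync directory does not exist: ${sync_dir}" >&2
    return 1
  fi

  # Run in a subshell so the caller's working directory is left unchanged.
  ( cd "$sync_dir" && "$pihole_cmd" -a teleporter )
}
```

Cron runs jobs with a minimal PATH (typically just /usr/bin:/bin), so setting PIHOLE_CMD to the full path of the pihole binary in the cron-invoked script avoids a "command not found" surprise.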

On the secondary nodes, a systemd service watches the target directories for incoming files (technically, for inotify moved_to events, since syncthing first copies the contents to a .tmp file and then renames it). Once a file is detected, the service runs pihole_restore with the new file as input. The systemd journal logs help me debug when things don’t go according to plan.

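```shell
# WATCH_DIR is the directory syncthing syncs the backup archives into.
# moved_to fires when syncthing renames its finished .tmp file into place.
inotifywait -q -m -e moved_to --format '%f' "${WATCH_DIR}" | while IFS= read -r f; do
  if [[ $f == *.tar.gz ]]; then
    RUST_LOG=debug /usr/bin/pihole-restore -f "${WATCH_DIR}/${f}" -c
  else
    echo "not using this file: ${f}"
  fi
done
```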
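Watching for moved_to works because syncthing never exposes a half-written file under its final name: it writes to a temporary file and renames it once the copy is complete. If an archive is ever dropped into a watched directory by hand or from another script, the same write-then-rename pattern keeps the watcher from picking up a partial file. A minimal sketch with a hypothetical publish_archive helper; the temporary file name is my own choice, not syncthing’s exact naming.

```shell
#!/usr/bin/env bash
# Hypothetical helper: copy an archive into a watched directory so that it
# only appears under its final name once fully written (write, then rename).
publish_archive() {
  local src="$1" dest_dir="$2"
  local name tmp
  name="${src##*/}"

  # The temporary file lives in the destination directory so the final mv is
  # a rename within one filesystem, which inotify reports as moved_to.
  # The leading dot and the .tmp part keep it out of the *.tar.gz filter.
  tmp="$(mktemp "${dest_dir}/.${name}.tmp.XXXXXX")" || return 1

  if ! cp -- "$src" "$tmp"; then
    rm -f -- "$tmp"
    return 1
  fi
  # mktemp creates the file as 0600; relax it to typical file permissions.
  chmod 0644 "$tmp"
  mv -f -- "$tmp" "${dest_dir}/${name}"
}
```

For example, publish_archive ./pihole-backup.tar.gz "${WATCH_DIR}" would trigger exactly one moved_to event for pihole-backup.tar.gz.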

I made only a few assumptions while developing this tool, so it can work with almost any setup you can think of, as long as the workflow goes through the CLI.
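As one example of a different setup: where inotify isn’t available, or a scheduled job is preferred (in the spirit of the cron idea mentioned earlier), a job could pick the most recently modified archive in a directory and hand it to pihole_restore. A sketch with a hypothetical newest_archive helper; ordering by modification time is an assumption about how archives arrive.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the most recently modified *.tar.gz in a
# directory, or return 1 if there is none.
newest_archive() {
  local dir="$1" newest="" f
  for f in "$dir"/*.tar.gz; do
    # With no matches the glob stays literal, so skip non-files.
    [ -f "$f" ] || continue
    # -nt is true when $f's modification time is newer than $newest's.
    if [ -z "$newest" ] || [ "$f" -nt "$newest" ]; then
      newest="$f"
    fi
  done
  [ -n "$newest" ] || return 1
  printf '%s\n' "$newest"
}
```

A scheduled job could then run latest="$(newest_archive "${WATCH_DIR}")" && pihole_restore -f "$latest". A real job would also want to remember which archive it last restored, so the same file isn’t imported on every run.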

Learnings

Reading the Pi-hole code was fun. There were a few past decisions I could not work out from the code itself, and the friendly community was able to help with those. It was also good to understand the structure of the Gravity database, especially with a product of my own to maintain now.

One of the best outcomes for me personally was getting comfortable enough with Rust to use it for other projects that come along. Hopefully ones I’ll do a better job of maintaining.

Cross-compiling the code for the ARM architecture was interesting. I’ll try to write a short article on it in the future.
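The first decision when cross-compiling is which Rust target triple to build for. A hypothetical helper, rust_target_for, maps a node’s uname -m output to a common triple; the mapping assumes a glibc-based, hard-float distribution such as Raspberry Pi OS, and other setups (musl, soft-float) need different triples.

```shell
#!/usr/bin/env bash
# Hypothetical helper: map `uname -m` output from a target machine to a
# Rust target triple. Assumes a glibc-based, hard-float Linux userland.
rust_target_for() {
  case "$1" in
    armv6l)  echo "arm-unknown-linux-gnueabihf" ;;   # e.g. Raspberry Pi 1 / Zero
    armv7l)  echo "armv7-unknown-linux-gnueabihf" ;; # e.g. Raspberry Pi 2
    aarch64) echo "aarch64-unknown-linux-gnu" ;;     # 64-bit ARM OS
    x86_64)  echo "x86_64-unknown-linux-gnu" ;;
    *)
      echo "unsupported machine: $1" >&2
      return 1
      ;;
  esac
}
```

For a Raspberry Pi 2, the build machine would then run rustup target add armv7-unknown-linux-gnueabihf followed by CARGO_TARGET_ARMV7_UNKNOWN_LINUX_GNUEABIHF_LINKER=arm-linux-gnueabihf-gcc cargo build --release --target armv7-unknown-linux-gnueabihf, assuming a cross linker such as arm-linux-gnueabihf-gcc is installed (on Debian-based systems it comes from the gcc-arm-linux-gnueabihf package).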

A somewhat challenging task will be keeping the project up to date with the changes rolled out in future Pi-hole releases. For now, I’ll just have to keep an eye on the release notes.