Dead (not a professional opinion) Pine tree on the coastal California

Following up from the last post let’s explore how to use Rust in Lambda functions.

AWS has introduced a neat cargo command to make life easier and focus on

building the business logic rather than get bogged down in deployment details.

This is the cargo lambda

command. Installing

the command is pretty straightforward on a linux environment.

pip3 install cargo-lambda

One detail to note is that cargo lambda command uses zig toolchain to

facilitate cross compiling the Rust code. This is important because Rust in

Lambda runs on the OS only runtimes which run on Amazon Linux

versions.

So, unless you’re developing on a Linux workstation, chances are you’d probably

be cross compiling your code. zig toolchain is used because it comes built in

with the cargo-zigbuild crate. More information on cross-compiling and known

issues can be found on the

documentation.

With that detail aside (and wondering why you should install zig for a Rust

project (which you don’t have to manually do if you use pip to install the

cargo lambda command)), let’s dive into the code.

Prerequisites

- Rust + basic knowledge on Rust

cargo lambdacommand- AWS access

Creating a Lambda Function

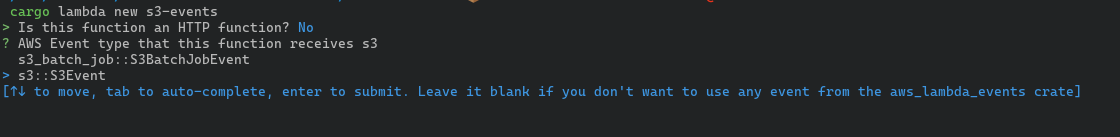

Like we use cargo new to create a new Rust project, cargo lambda new

command can be used to create a new Rust Lambda project.

cargo lambda new lambda-hello-world

This requires a couple of questions that would then generate the boilerplate code needed for a bare minimum Lambda function. The first question is about how the function is triggered. If the function is triggered by an AWS service related event, the second question will be to select the service that will trigger the Lambda function.

For either type, the skeletal code that is generated consists of the following structure.

- async main function that enables tracing and invokes the function handler

- the function handler code that gets passed the incoming event

This is similar to what the other supported languages (like with managed runtimes such as Python) but without the main function entrypoint. Since Rust on Lambda runs on the OS only runtimes, the main function entrypoint is required.

A detail of interest is the use of the tracing_subscriber which enables

outputting trace information from the code. Lambda function execution runtime

gathers the output from stdout and tracing_subscriber to CloudWatch logs.

Furthermore, tracing_subscriber’s instrument feature can be used to inject

the request ID for each log line generated this way to make debugging easier.

#[tokio::main]

async fn main() -> Result<(), Error> {

tracing_subscriber::fmt()

.with_max_level(tracing::Level::INFO)

// disable printing the name of the module in every log line.

.with_target(false)

// disabling time is handy because CloudWatch will add the ingestion time.

.without_time()

.init();

run(service_fn(function_handler)).await

}

More information on the Lambda function logging for Rust can be found in the documentation.

For HTTP requests, the type of the struct passed to the function handler

function is lambda_http::Request. The crate lambda_http provides the type

definitions for the HTTP functions. You’ll notice that this is already in

Cargo.toml along with lambda_runtime. This is what is made convenient by

the cargo lambda command, and it is just getting started.

For Event trigger based functions, the type definitions are provided by

aws_lambda_events crate (with somewhat inconsistent naming convention as I

noticed). This dependency is added if the type of the function is selected to

be an Event trigger (answered with n for the first question during cargo lambda new command). Unlike the code generated for an HTTP triggered function,

the function handler in an AWS service Event triggered function will have the

specific service Event type as the input parameter type. For an example, for a

function that is triggered by S3 Events, the type of the parameter is

aws_lambda_events::events::s3::S3Event. Note that cargo lambda new adds the

aws_lambda_events dependency with the specific service feature only. So if

your Lambda function code deals with multiple services and needs to use those

type definitions, you’ll have to manually add the features for the additional

services through

cargo add command.

async fn function_handler(event: LambdaEvent<S3Event>) -> Result<(), Error> {

// Extract some useful information from the request

Ok(())

}

Each Event type’s documentation can help on understading the fields available after deserialisation.

Watch and Invoke Commands

The true beauty of cargo lambda starts after the code generation part. cargo lambda watch and cargo lambda invoke commands help iteratively develop and

test the Lambda function code with minimal round trip time for a test cycle.

While at a certain point, the function will have to be uploaded and run on

actual AWS environment (or if you have something like localstack, there),

initial development rounds can benefit a lot from a hot reload npm start like

process.

cargo lambda watch keeps watching the source code files and recompiles and

runs the function code. For HTTP triggered functions this makes the function

available on http://localhost:9000. For Event triggered lambda functions, the

command cargo lambda invoke can be used. This can use the sample request

repository

that contains sample JSON requests for most of the services supported by Lambda

functions as triggeres. For an example, for a Lambda function that is triggered

by an S3 Event, the following command can be used to test function trigger.

cargo lambda invoke --data-example s3-event

The flag --data-example refers to the file name without the prefix example-

in the lambda-events directory in the aws-lambda-rust-runtime

repository.

If this sample request needs to be customised, it can be downloaded,

customised, and used with the --data-file

flag.

Deploying the Function

After a few rounds of iterative development you’d finally want to deploy the

function to an actual AWS account. For this the easiest way is to use cargo lambda build and cargo lambda deploy commands together.

cargo lambda build compiles (or cross-compiles if you’re in a non Linux

environment) the function code and builds the bootstrap binary file. It can be

used to build an optimised binary with minimal file size and optimised for the

Lambda runtime by using the --release flag. Additionally, it can also

cross-compile for target architecture arm64, the result of which can be run on

Lambda Graviton2 processors, enabling more price performance on top of what you

would already get by using Rust (instead of the obvious underdog, Java).

cargo lambda invoke is used to create/update the function with the code built

by the above build command. Something to note about this command is the use

of appropriate AWS credentials. When deploying as a new function, cargo lambda

will create the IAM role to be used as the function execution role. If your

credentials cannot do this for some reason (lack of permissions or MFA), the

invoke command will fail to deploy the function. If your credentials have the

necessary permissions but still fail at the above command, check that

- the credentials are not generated at AWS IAM Identity Center (previously known as AWS SSO - which was a perfectly suitable name). Rust SDK does not still support credentials generated by AWS SSO.

- credentials aren’t temporary ones generated by STS without an MFA. STS credentials generated without an MFA cannot perform IAM API operations.

Also note that the invoke command does not create the peripheral

configuration such as the actual trigger for the function. Those details still

have to be created, either manually or by the IaC run you’ll probably execute.

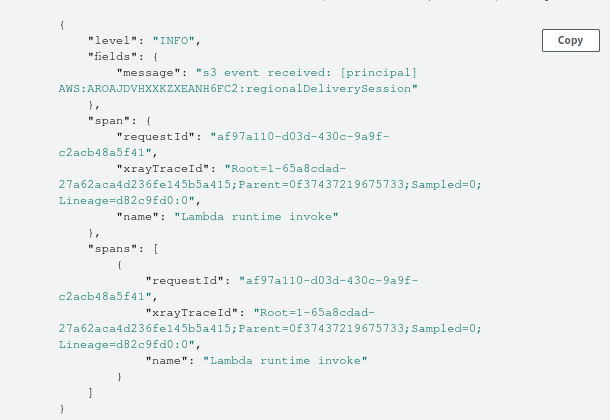

With the tracing_subscriber modified to output structured JSON logging, and

with a trace log added with info level, the following line can be seen in

CloudWatch logs when the trigger is appropriately configured and the function

executed.

#[tokio::main]

async fn main() -> Result<(), Error> {

tracing_subscriber::fmt()

// setting structured JSON output

.json()

.with_max_level(tracing::Level::INFO)

// disable printing the name of the module in every log line.

.with_target(false)

// disabling time is handy because CloudWatch will add the ingestion time.

.without_time()

.init();

run(service_fn(function_handler)).await

}

async fn function_handler(event: LambdaEvent<S3Event>) -> Result<(), Error> {

// Extract some useful information from the request

tracing::info!("s3 event received: [principal] {}", event.payload.records[0].principal_id.principal_id.as_ref().unwrap());

Ok(())

}

More configuration options for the function such as the environment variables

and resources can be configured either through the command flags or entries in

the Cargo.toml file under the section

package.metadata.lambda.deploy.

With the configuration in Cargo.toml, and with additional support from the

AWS construct for Rust for

Lambda, CDK can be used to

deploy and manage the state for the Lambda function.

Conclusion

There are a lot of discussions around the runtime efficiency of Rust compared to managed runtimes or other contendors when running Lambda functions. Whatever the reasons, the toolkit provided by AWS provides a comfortable bridge for getting started with Rust for Lambda functions.

The same toolkit can also be used to develop Lambda function extensions , although this is out of scope for this post for now.