Bixby Creek Bridge on CA-01 highway, Monterey, California

This is the first part of a series of posts on the topic.

Last year I had an opportunity to work with a team of Data Scientists on developing an Enterprise AI/ML practice. As someone who had not interacted at development level with Machine Learning and AI before, I jumped on the chance to learn something new and find a challenge on a new area. The following are a couple of notes (more to myself) of key points I learnt as an engineer with only software development and DevOps experience.

The aim of these articles is to give an introduction to Machine Learning practices and MLOps from a software development and Cloud point of view. Most concepts I mention here would not go too deep into the topics. If you are a DevOps engineer who is looking to play the ML Engineer role in a team, the pointers in these articles could help you. On the other hand if you’re looking to learn Machine Learning and looking for a starter, this is not it.

I also should note that some of the methodologies and practices I mention related to Data Science may not be the way you might be practicing at your team. The more I worked with the Data Scientists, the more I could establish a common vocabulary for concepts that both of us would refer to. These terms could be a bit different from your usage as well. I’m a software developer and will probably never be a data scientist.

When I refer to “software development” in these articles, I’m referring to the other types of software development and operational aspects of the projects and products that do not have Artificial Intelligence or Machine Learning components to them. While I’m being a little pedantic here, I don’t like the phrase “traditional software engineering” that similar articles use. Software Engineering is the umbrella term for all types of such practices and Machine Learning projects in the industry are part of Software Engineering as a practice.

Putting everything into a single article quickly became a bad idea, so I have split this into a series of smaller ones. I will be updating this post in the future with all the links at the top for easy access.

Edit down the line: I tried to keep the series provider-agnostic, but halfway through it, I could see that at least some concrete real-world examples of features have to be explained. In the above mentioned project I worked with GCP Vertex and Azure ML Studio, however I’ve used Amazon SageMaker as the reference provider since AWS is what I work with the most these days. My work also involves AWS a lot, so remember to filter the bias.

ML and Learning the Basics

When you’re working in a multi-disciplinary team, the key quality to build first is a common language for communication. This would take priority over even the functional goals of the team since you need to be able to work efficiently together towards a common goal. As a non-ML engineer, this was the first challenge in my job.

I’m guessing most readers would have an understanding of what Machine Learning is. For me other than a Data Mining module I did in the university, I had no clear idea what ML was in the current landscape. It’s practically impossible to avoid coming across the phrases “AI”, “Model”, “Deep Learning” on a daily basis unless you live under a rock these days (Edge AI and IPv6 could fix that), but a decade old memory on working through a KNN example isn’t the greatest qualification for working on ML.

I dedicated at least a month of extra work on building an understanding of what AI and ML is, and the “language” the Data Scientists use. I followed the age-old “try building a model at first, fail and go back to learning” method to the letter. After writing Python code to build a simple binary classification model (I didn’t know this at the time) and not understanding why each line of code was doing what it did, I stepped down to learning basic ML. The Google Machine Learning Youtube Playlist was helpful here to get a good start. It’s old so there could be a few API changes in the libraries that you’ll be using, but nothing a simple web search can’t fix.

You could also start with the Standford CS229 ML Course on Youtube but I do not recommend getting bogged down in details at these early stages. However, once you have some experience working with ML, revisiting this is recommended. Andrew Ng is a giant in this space and brings a lot of knowledge and light into the learning.

I also found that I needed to learn basic statistics and mathematics needed for ML. A few playlists in StatQuest Youtube channel helped a lot in this case. The author has also published a book on the topic which can be helpful to any beginner.

How is Machine Learning Different?

Why does Machine Learning need a different perspective compared to software development? Because Machine Learning as a practice has the following key differences from other software development practices.

End Product

In software development solutions, the end product is a binary or a collection of binaries and associated artefacts. These are typically produced as a result of a build pipeline that compiles the source code and any supporting artefacts together and produces consistent results for a given version of the source code. By design, these artefacts for a given version should be reproducible. The released binary or package has a clear progression of features and bug-fixes in the release timeline.

In Machine Learning, the build result is a Model, which is a sweet spot in the problem’s solution space. This point is hard to reproduce in multiple builds of the same version of the source code. This is mostly because source code is just one part of the build process in Machine Learning. A build, or a Model training in Machine Learning, is using a data set that potentially contains a pattern (or called a hypothesis), and source code that determines how to traverse the data set to find this pattern which can be used to generate predictions on related but never before seen data sets, or as called later real world data. While the source code and the data set can be the same for a given two training runs, the pattern the algorithm ends up with could be different.

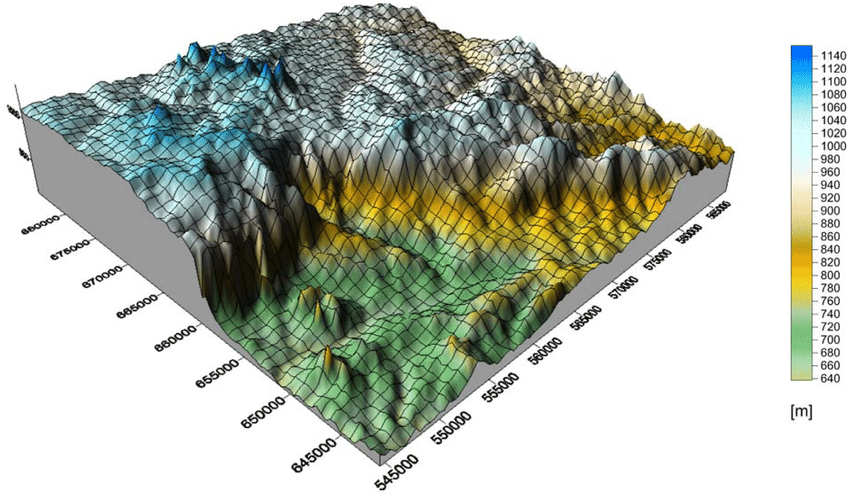

Image from Analysis of a wind turbine project in the city of Bouar (Central African Republic) - Scientific Figure on ResearchGate. Available from: https://www.researchgate.net/figure/3D-Elevation-map-of-Bangui-outskirts_fig7_340074762 [accessed 22 Nov, 2022]

Imagine you have at your table a physical model of one of those topographic maps of a mountainous terrain. You’d have mountain tops, valleys, and one or two trenches. You are tasked to find the lowest point (minima) in the map by only making finite small incremental steps of touches with your eyes closed. Each round starts with a clean state with you having to forget what you learnt from the earlier round (somehow, I don’t know, let’s say hypnosis is involved). In this case, you would find low points in the map just by touching, but multiple rounds would probably result in you deciding different valleys or trenches being the lowest point (you can’t undo your steps), which are not the actual lowest point (local minima, not the true minima). You might even find the actual lowest point at one round as well. You can change factors like the distance each step would travel (the distance between two touch points), or the finite number of steps for each round.

Think of training a Model as trying to find the “lowest” point in the solution space by a machine which has no vision, no memory between the rounds (training jobs), and no way to determine if it has actually reached the real lowest point. Each “round” or training job could end up on an good solution spot, but it might not be the optimal point, and you might not end up on the same spot twice.

If you’re a Data Scientist, you might go “well, that’s not the entire picture” at this point. That is true, but I’m using this description for two reasons. It’s better to keep concepts simple for the learning experience. Most engineers who are not Data Scientists will be able to contribute without having an understanding of the other definitions and use cases (most of the time). You might also go “that’s wrong!”. That’s because I don’t know better. For an introduction I think this is good enough.

Understanding Data

Observations made on a given problem and its possible solutions has a high importance in all software development. In fact, in software development understanding the problem and possible ways of solving is key to writing good software and doing it efficiently. However, other than structured test data, data points of the problem wouldn’t normally play a key role in actual software development.

In Machine Learning, data is the problem. Solving it involves figuring out the “class” of the problem, and figuring out possible approaches to solving it. To do this, understanding the data set involved and the potential data set the Model will have to work with is critical.

To do this, the input data should be cleaned and only the important data should be focused on. Most of the time, Machine Learning works on Data Lakes with unorganised dumps of data, collected hoping future Machine Learning and Data Engineering work would be able to use them. This data should be cleaned up, normalised, and made into formats that Machine Learning understands and can work with. To do this effectively, a good understanding of the problem is important, since “cleaning up” data can mean different things with different problems that can be solved with the same data set.

Data is worked on as columns rather than rows, which is important when working with big data for analytical purposes. Efficient columnar storage, retrieval, and querying is probably solved at this stage of the solution. Each column that might be of interest to the problem is known as a Feature. Getting the right set of Features as input to a problem solving algorithm is important as well. Too little, and your solution is plain wrong as it fails to capture the whole picture. Too much, and you might be wasting precious time and compute power on details that don’t really matter. This is known as the Curse of Dimensionality since trying to find a pattern in a multi-dimensional problem space becomes increasingly difficult as the number of dimensions increase.

It is critical that this phase is done right. The entire solution could be influenced by decisions made in this phase, or as the practitioners say “garbage in, garbage out”. For an example cleaning up data in a way that rows with absent values for certain columns are dropped, could introduce a clear bias in the resulting Model. Likewise, selecting wrong Features, or Features with superficial patterns that don’t really contribute to the solution could waste resources and lose support for the entire project. Feature Engineering is a sub topic that works on best practices for identifying, selecting, and deriving new Features.

Amazon SageMaker helps in this aspect by making it possible to perform feature discovery and engineering in the Notebooks (we’ll be discussing what Notebooks are later) themselves with integrated library functions. The well-documented Git integration also allows packaging data transformation and loading logic as separate reusable functions so that different data sets and even different teams can reuse what has already been proven to work.

The scale of data that has to be processed is also something to point out. With the massive volume of data involved in Machine Learning, efficient data storage and retrieval are problems of their own. Different data management platforms have been offered to tackle enterprise data management such as Snowflake Data Warehousing and DataBricks Delta Lake that focus on data storage optimisation.

Amazon SageMaker takes a step further with it’s Streaming functionality to make the data available as quickly as possible without having to copy an entire data set across to the compute instance.

This part of the process is commonly known as Exploratory Data Analysis (EDA).

More comparison between Machine Learning and Software Development continued in the next article.