
I recently completed a short article series on the details of deploying an ELK stack on K8s. The links to the series are below.

- ElasticSearch on K8s: 01 — Basic Design

- ElasticSearch on K8s: 02 — Log Collection with Filebeat

- ElasticSearch on K8s: 03 - Log Enrichment with Logstash

- ElasticSearch on K8s: 04 - Log Storage and Search with ElasticSearch

- ElasticSearch on K8s: 05 - Visualization and Production Readying

- ElasticSearch Index Management

- Authentication and Authorization for ElasticSearch: 01 - A Blueprint for Multi-tenant SSO

- Authentication and Authorization for ElasticSearch: 02 - Basic SSO with Role Assignment

- Authentication and Authorization for ElasticSearch: 03 - Multi-Tenancy with KeyCloak and Kibana

So far, the articles have discussed the functional requirements of a log aggregation stack deployed on K8s: the core ELK functions, and how to mix and match K8s features to get the best out of them. Once the stack is receiving and storing logs, there are parallel concerns that should be addressed. User management is one of these critical concerns, and it is the topic of this article.

Need for Authn/Authr for ELK

First of all, in this day and age, this shouldn't even be up for discussion. Every service should have a wrapper layer that authenticates and authorizes the user before granting access to the data the service offers. This is especially true when the service manages almost all significant data produced as a side effect of operations on an enterprise platform. Since ELK is typically the log aggregation stack for one or more systems in an organization, its log data should sit behind an authn/authr layer.

Authentication makes sure that data access is restricted to a set of authenticated users from a predetermined user store. For example, this could be the organization's LDAP user store with an associated identity provider (e.g. KeyCloak).

Authorization makes sure that authenticated users are given only predetermined, controlled access. For example, log access could be separated by department, so that logs produced by systems belonging to deptA are viewable only by users from deptA and by Ops personnel from the internal platform team. This can be broken down further into read-only access, an access level with a subset of write operations, and an access level that can perform all write operations.

In the ELK stack, both authentication and authorization are handled by features of ElasticSearch. Authentication realms can be defined so that users must authenticate before accessing indices. After authentication, roles and role mappings are used to grant users the correct set of permissions. All of these features come from XPack, a plugin that Elastic merged into the core product a few releases ago.
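
To make the split between roles and role mappings concrete, here is a minimal sketch that builds request bodies for ElasticSearch's security APIs (`PUT /_security/role/<name>` and `PUT /_security/role_mapping/<name>`) for the deptA example above. The role name `deptA_logs_read`, the index pattern `logs-depta-*`, the realm name `saml1`, and the group value `deptA` are hypothetical placeholders. The code only builds and prints JSON; it does not call a cluster.

```python
import json


def read_only_role(index_patterns):
    """Body for PUT /_security/role/<name> granting read-only access to the given indices."""
    return {
        "cluster": [],
        "indices": [
            {
                "names": list(index_patterns),
                # 'read' allows search/get on documents; 'view_index_metadata'
                # allows reading mappings and field capabilities (used by Kibana).
                "privileges": ["read", "view_index_metadata"],
            }
        ],
    }


def group_role_mapping(role_names, realm_name, group):
    """Body for PUT /_security/role_mapping/<name>: assign roles to users from a
    given realm whose 'groups' attribute contains the given group."""
    return {
        "roles": list(role_names),
        "enabled": True,
        "rules": {
            # Both conditions must hold for the mapping to apply.
            "all": [
                {"field": {"realm.name": realm_name}},
                {"field": {"groups": group}},
            ]
        },
    }


if __name__ == "__main__":
    # Hypothetical names for the deptA example.
    print("PUT /_security/role/deptA_logs_read")
    print(json.dumps(read_only_role(["logs-depta-*"]), indent=2))
    print("PUT /_security/role_mapping/deptA_logs_read")
    print(json.dumps(group_role_mapping(["deptA_logs_read"], "saml1", "deptA"), indent=2))
```

The role says *what* may be done; the mapping says *who* gets it, based on attributes from the authentication realm. To actually use Kibana, such a user also needs Kibana feature or space privileges, which are granted separately.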

Note that some of the features discussed in these articles are only available in the paid tiers of the ELK subscription model.

Need for SSO

By default, XPack lets you enable basic authentication as a way of managing users for the ELK stack. However, this means the underlying user store lives in ElasticSearch, and users have to maintain separate accounts just to log in to the logging platform. It would be better to reuse the accounts users already have on the corporate platform (e.g. the corporate LDAP store) for authentication and authorization on the logging platform as well.

This is where SSO comes into play. Single Sign-On allows the accounts provided by the corporate Identity Provider (or any other external Identity Provider) to be used for authn/authr by other service providers. The XPack plugin also has authentication realms for configuring SSO relationships between ELK and other IDPs through standards such as SAML and OIDC. These realms also provide ways to map users from external IDPs to internal ElasticSearch roles based on predefined criteria.
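
A SAML realm of this kind is declared in `elasticsearch.yml`. The sketch below generates those settings for a KeyCloak IdP, assuming Elasticsearch 7.x setting names and the Kibana 7.x SAML callback path (`/api/security/saml/callback`). The realm name `saml1`, the host names, the KeyCloak realm `elk`, the legacy `/auth` KeyCloak context path, and the `groups` attribute name are hypothetical; check the exact keys against the SAML realm documentation for your version.

```python
import json


def saml_realm_settings(realm, order, idp_metadata, idp_entity_id, kibana_url):
    """Flat elasticsearch.yml settings for a SAML realm that uses Kibana as the SP."""
    prefix = f"xpack.security.authc.realms.saml.{realm}"
    base = kibana_url.rstrip("/")
    return {
        # SAML realms rely on the token service (Kibana holds access/refresh tokens).
        "xpack.security.authc.token.enabled": True,
        f"{prefix}.order": order,
        # Where ElasticSearch reads the IdP metadata from (a file path or an HTTPS URL).
        f"{prefix}.idp.metadata.path": idp_metadata,
        f"{prefix}.idp.entity_id": idp_entity_id,
        # Kibana acts as the SAML service provider.
        f"{prefix}.sp.entity_id": base + "/",
        f"{prefix}.sp.acs": base + "/api/security/saml/callback",
        f"{prefix}.sp.logout": base + "/logout",
        # SAML attributes used as the username and as the group list for role mappings.
        f"{prefix}.attributes.principal": "nameid",
        f"{prefix}.attributes.groups": "groups",
    }


def to_yaml_lines(settings):
    """Render flat settings as 'key: value' lines (JSON strings are valid YAML scalars)."""
    lines = []
    for key, value in settings.items():
        if isinstance(value, bool):  # check bool before int: bool is an int subclass
            rendered = "true" if value else "false"
        elif isinstance(value, int):
            rendered = str(value)
        else:
            rendered = json.dumps(value)
        lines.append(f"{key}: {rendered}")
    return lines


if __name__ == "__main__":
    keycloak_realm = "https://keycloak.example.com/auth/realms/elk"  # hypothetical host/realm
    settings = saml_realm_settings(
        realm="saml1",
        order=2,
        idp_metadata=keycloak_realm + "/protocol/saml/descriptor",
        idp_entity_id=keycloak_realm,
        kibana_url="https://kibana.example.com",
    )
    print("\n".join(to_yaml_lines(settings)))
```

The `attributes.groups` setting is what ties this to role mappings: whatever values the IdP sends in that attribute become the `groups` field that mapping rules match on.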

Multi-Tenancy in ELK

In the article on Logstash and log enrichment, an example strategy for routing incoming logs into different indices was discussed. In a more established setup, this naturally extends to a point where different tenants of the system produce logs that end up in different indices. When that happens, data access should also be segregated so that users cannot view logs from other tenants. This can also lead to a more complex user model, with several layers of access granted to users depending on the scope of their control.

For example, in a wider-scoped setup, there could be tenant-specific read-only roles (e.g. for managers and HR), tenant-specific admin roles (e.g. for tenant-specific support engineers), system-wide read-only roles (e.g. for the service provider's sales personnel), system-wide semi-admin roles (e.g. for the service provider's support and pre-sales engineers), and super-admin roles (e.g. for the service provider's cloud engineers). Each of these roles has to be granted based on the attributes provided by the authentication system, be it the internal ElasticSearch user store or an external IDP.
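
The layered role model above can be expressed as a set of role definitions plus one role mapping per role. This sketch assumes a hypothetical convention where tenant logs live in `logs-<tenant>-*` indices and the IdP sends a `groups` attribute with values such as `<tenant>-viewers` or `platform-support`. The privileges chosen for each layer are illustrative, not a recommendation.

```python
import json

# Index privileges reused across layers (illustrative choices).
READ = ["read", "view_index_metadata"]
MANAGE = READ + ["manage"]


def index_role(patterns, privileges, cluster=()):
    """Body for PUT /_security/role/<name> with a single index-permission entry."""
    return {
        "cluster": list(cluster),
        "indices": [{"names": list(patterns), "privileges": list(privileges)}],
    }


def build_role_model(tenants):
    """Return (roles, mappings) keyed by role name and mapping name.

    Tenant ids should be lowercase, since ElasticSearch index names are lowercase.
    Group names follow a hypothetical '<tenant>-viewers' / 'platform-*' convention.
    """
    roles, mappings = {}, {}

    def add(role_name, body, group):
        roles[role_name] = body
        mappings[f"{role_name}_mapping"] = {
            "roles": [role_name],
            "enabled": True,
            "rules": {"field": {"groups": group}},
        }

    for tenant in tenants:
        pattern = f"logs-{tenant}-*"
        # Tenant-specific read-only (managers, HR) and admin (tenant support) roles.
        add(f"{tenant}_viewer", index_role([pattern], READ), f"{tenant}-viewers")
        add(f"{tenant}_admin", index_role([pattern], ["all"]), f"{tenant}-admins")

    # System-wide read-only, semi-admin, and super-admin roles.
    add("platform_viewer", index_role(["logs-*"], READ), "platform-viewers")
    add("platform_support", index_role(["logs-*"], MANAGE, cluster=["monitor"]), "platform-support")
    add("platform_superadmin", index_role(["*"], ["all"], cluster=["all"]), "platform-admins")
    return roles, mappings


if __name__ == "__main__":
    roles, mappings = build_role_model(["depta", "deptb"])
    for name, body in roles.items():
        print(f"PUT /_security/role/{name}")
        print(json.dumps(body))
    for name, body in mappings.items():
        print(f"PUT /_security/role_mapping/{name}")
        print(json.dumps(body))
```

Generating the roles from a tenant list keeps the per-tenant roles uniform, so onboarding a tenant means adding one id rather than hand-writing several roles and mappings.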

Designing a Solution

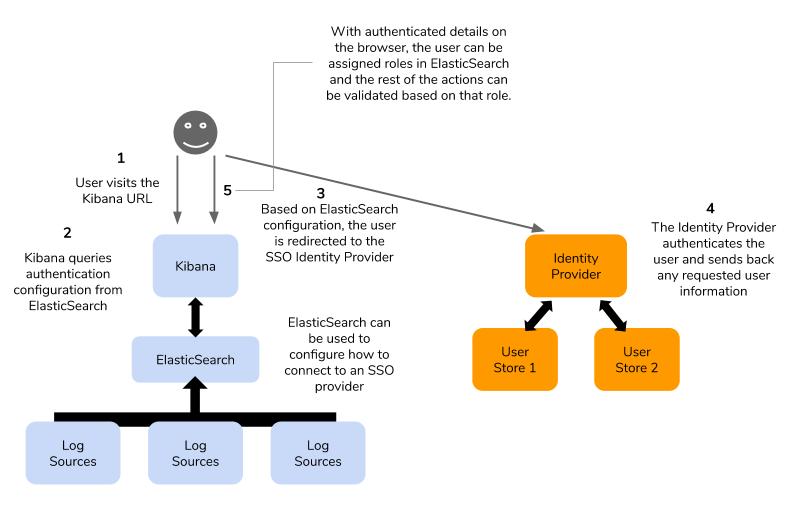

Based on the above requirements, a high-level design such as the following can be approached.

Breakdown of the flow

After such a design is implemented, the following flow should be possible.

- The user will start the flow by opening the Kibana URL in the browser.

- After the connection is established, Kibana should check if the user is already logged in. If not, then the user should be redirected to the pre-configured external IDP to be authenticated.

- This redirection will probably be visible to the user as a browser redirect. The IDP should check whether the user is already logged in to the IDP and, if not, present the login form.

- After successful authentication, the external IDP should send the user back to the Kibana UI, again through a browser redirection. This response can also carry additional user information that Kibana/ElasticSearch and the IDP have been preconfigured to share.

- With the received user information, ELK will grant the user an appropriate role through pre-defined role mappings. These roles will determine which actions the user will be allowed to perform on the ELK cluster through the Kibana UI.
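
On the Kibana side, the redirect to the IdP in this flow is enabled by declaring a SAML authentication provider in `kibana.yml` that points at the ElasticSearch SAML realm. The sketch below assumes the provider syntax introduced around Kibana 7.7 (older releases used a different, flat format) and a realm named `saml1`; it only renders the settings and does not validate them against Kibana.

```python
import json


def kibana_saml_providers(saml_realm, keep_basic_login=True):
    """kibana.yml security settings declaring a SAML provider (7.7+ style syntax)."""
    providers = {f"saml.{saml_realm}": {"order": 0, "realm": saml_realm}}
    if keep_basic_login:
        # Optional fallback so local (native realm) users can still log in.
        providers["basic.basic1"] = {"order": 1}
    return {"xpack.security.authc.providers": providers}


def to_yaml(node, indent=0):
    """Render a nested dict as indented YAML lines (dicts, bools, ints, strings only)."""
    lines = []
    pad = "  " * indent
    for key, value in node.items():
        if isinstance(value, dict):
            lines.append(f"{pad}{key}:")
            lines.extend(to_yaml(value, indent + 1))
        elif isinstance(value, bool):  # bool before int: bool is an int subclass
            lines.append(f"{pad}{key}: {'true' if value else 'false'}")
        elif isinstance(value, int):
            lines.append(f"{pad}{key}: {value}")
        else:
            lines.append(f"{pad}{key}: {json.dumps(value)}")
    return lines


if __name__ == "__main__":
    print("\n".join(to_yaml(kibana_saml_providers("saml1"))))
```

With this in place, an unauthenticated request to Kibana is handed to the `saml1` realm, which produces the redirect to the IdP described in the flow above; the roles granted on return come from the role mappings.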

In the next article(s), let’s explore how to implement this solution. We will be using a self-hosted KeyCloak server as our IDP with KeyCloak’s internal database as the user store.

We will be using SAML as the SSO protocol, mostly because ELK's support for OIDC has been weak at best (especially in Elastic's Cloud SaaS solution). That said, the implementation details of an OIDC-based solution should not differ much.

Kibana, as the service provider, supports multi-tenancy in the form of Kibana Spaces. However, at the time of writing, Kibana Spaces and SSO do not combine into a fully fledged multi-tenant solution (from Kibana's point of view, there is no tenant discovery mechanism for SSO). We will also explore workarounds to build a usable multi-tenant SSO system for ELK using the federated identity provider (identity brokering) feature in KeyCloak.

Furthermore, we will also explore ways to implement complex user models on top of the SSO solution so that different roles can be assigned to different users based on certain granular rules.